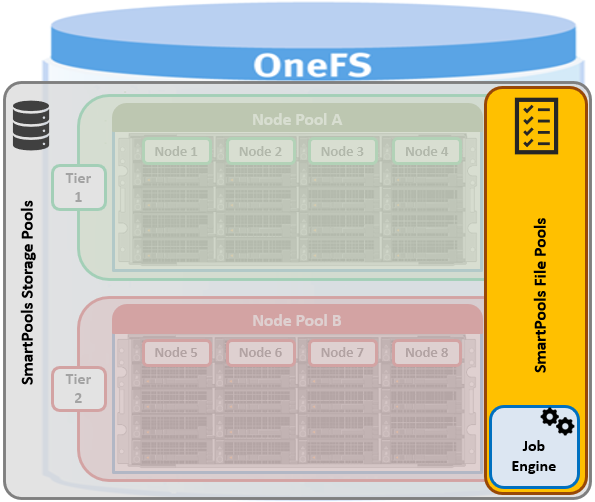

The new OneFS 9.5 release introduces the first phase of engineering’s Smarter SmartPools initiative, and delivers a new feature called SmartPools transfer limits.

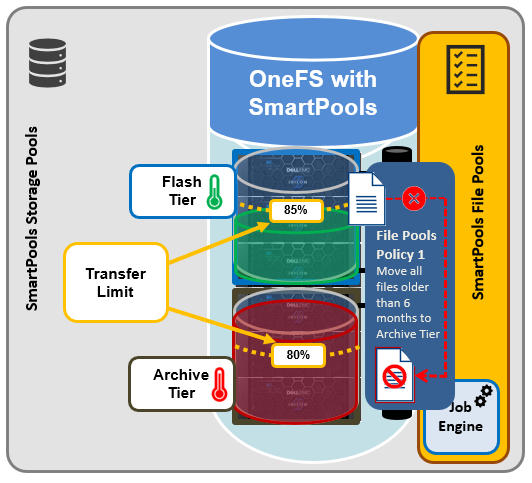

The goal of SmartPools transfer limits is to address spillover. Previously, when file pool policies were executed, OneFS had no guardrails to protect against overfilling the destination, or target, storage pool. If a pool was overfilled, data would unexpectedly spill over into other storage pools.

An overflow would result in storagepool usage exceeding 100%, and in the SmartPools job itself doing a considerable amount of unnecessary work as it tried to send files to a given storagepool. Because the pool was full, the job would then have to send those files to another storage pool that was below capacity. Data would end up where it wasn’t intended, and individual files could be split between pools. Also, if the full pool was on the most performant storage in the cluster, all newly created data would now land on slower storage, affecting its throughput and latency. Recovering from a spillover can be fairly cumbersome, since it’s tough for the cluster to regain balance, and urgent system administration may be required to free space on the affected tier.

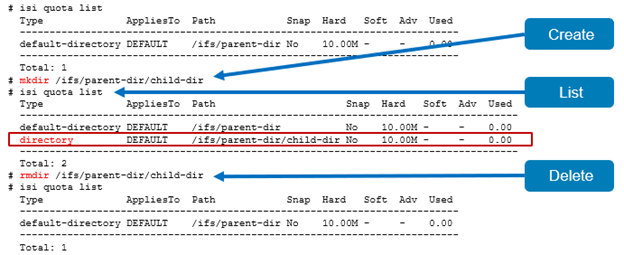

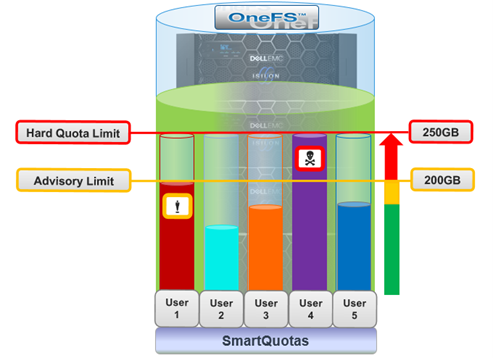

To address this, SmartPools transfer limits allow a cluster admin to configure a storagepool capacity-usage threshold, expressed as a percentage, beyond which file pool policies stop moving data to that particular storage pool.

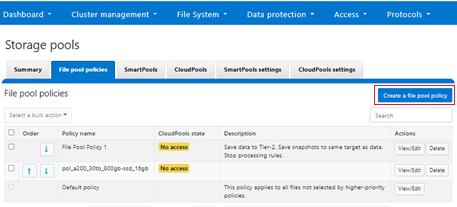

These transfer limits only take effect when running jobs that apply filepool policies, such as SmartPools, SmartPoolsTree, and FilePolicy.

The main benefits of this feature are two-fold:

- Safety, in that SmartPools won’t overfill storage pools, so OneFS avoids undesirable actions and the customer is kept out of escalation situations.

- Performance, since transfer limits avoid unnecessary work, and allow the SmartPools job to finish sooner.

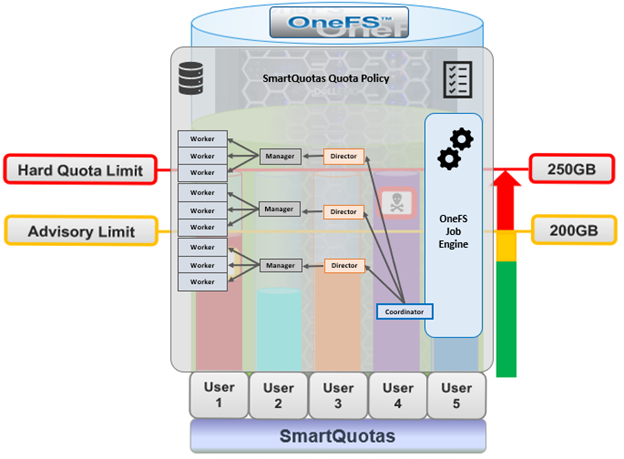

Under the hood, a cluster’s storagepool SSD and HDD usage is calculated using the same algorithm that the ‘isi storagepools list’ CLI command uses for its reporting. This means that a pool’s VHS (virtual hot spare) reserved capacity is respected by SmartPools transfer limits. When a SmartPools job is running, there is at least one worker on each node processing a single LIN at any given time. To calculate the current HDD and SSD usage per storagepool, each worker must read from the diskpool database. To keep these reads from becoming a bottleneck, the filepool policy algorithm caches the diskpool database contents in memory for up to 10 seconds.
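The caching behavior described above can be illustrated with a small time-bounded cache. This is a minimal sketch, not the OneFS implementation: the `CachedUsage` class, its `loader` callback, and the injectable `clock` are all hypothetical names introduced for illustration. Only the 10-second lifetime comes from the text.

```python
import time
from typing import Callable, Dict, Optional


class CachedUsage:
    """Hypothetical helper: keeps a usage snapshot for a bounded time.

    `loader` stands in for the expensive read of per-pool usage; it is an
    assumption for this sketch, not a OneFS API.
    """

    def __init__(
        self,
        loader: Callable[[], Dict[str, float]],
        ttl_seconds: float = 10.0,  # "up to 10 seconds", per the text
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._snapshot: Optional[Dict[str, float]] = None
        self._loaded_at = 0.0

    def get(self) -> Dict[str, float]:
        """Return the cached snapshot, reloading it once it has expired."""
        now = self._clock()
        if self._snapshot is None or now - self._loaded_at >= self._ttl:
            self._snapshot = self._loader()
            self._loaded_at = now
        return self._snapshot
```

With a cache like this, a worker that evaluates many LINs in quick succession would trigger at most one expensive read per cache lifetime. The trade-off is that usage figures can be slightly stale.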

Transfer limits are stored in gconfig, and a separate entry is stored within the ‘smartpools.storagepools’ hierarchy for each explicitly defined transfer limit.

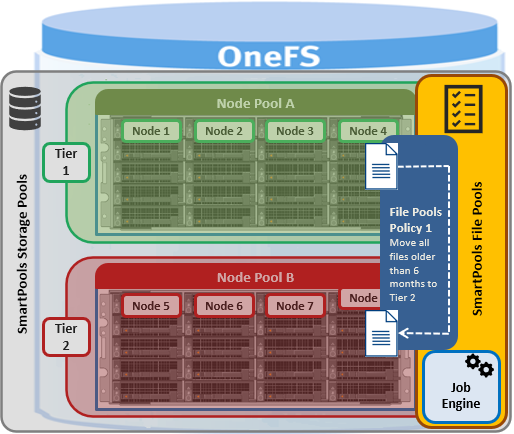

Note that in the SmartPools lexicon, ‘storage pool’ is a generic term denoting either a tier or nodepool. Additionally, SmartPools tiers comprise one or more constituent nodepools.

Each gconfig transfer limit entry stores a limit value and the diskpool database identifier of the storagepool that the transfer limit applies to. Additionally, a ‘transfer limit state’ field specifies which of three states the limit is in:

| Limit State | Description |
| --- | --- |
| Default | Fallback to the default transfer limit. |
| Disabled | Ignore transfer limit. |
| Enabled | The corresponding transfer limit value is valid. |
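One way to read the three states is as a rule for resolving the limit that applies to a pool. The sketch below is illustrative only: `LimitState`, `TransferLimitEntry`, and `effective_limit` are hypothetical names, not the actual gconfig schema, and the 90% fallback is the default value this article describes.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

DEFAULT_TRANSFER_LIMIT_PCT = 90.0  # default limit described in this article


class LimitState(Enum):
    DEFAULT = "default"
    DISABLED = "disabled"
    ENABLED = "enabled"


@dataclass
class TransferLimitEntry:
    """Hypothetical stand-in for one stored transfer limit entry."""
    pool_id: int                       # diskpool database identifier
    state: LimitState
    limit_pct: Optional[float] = None  # only meaningful when ENABLED


def effective_limit(
    entry: TransferLimitEntry,
    default_pct: float = DEFAULT_TRANSFER_LIMIT_PCT,
) -> Optional[float]:
    """Return the limit to enforce, or None if no limit applies."""
    if entry.state is LimitState.DISABLED:
        return None                    # ignore the transfer limit
    if entry.state is LimitState.ENABLED:
        return entry.limit_pct         # the stored value is valid
    return default_pct                 # fall back to the default limit
```

For example, an entry in the `ENABLED` state with `limit_pct=85.0` resolves to 85.0, while an entry in the `DEFAULT` state resolves to the default regardless of any stored value.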

A SmartPools transfer limit does not affect the general ingress, restriping, or reprotection of files, regardless of how full the storage pool is where that file is located. So if you’re creating or modifying a file on the cluster, it will still be written to that pool. This continues until the pool reaches 100% capacity, at which point data spills over.

The default transfer limit is 90% of a pool’s capacity, and this applies to all storage pools where the cluster admin hasn’t explicitly set a threshold. Another thing to note is that the default limit doesn’t get set until a cluster upgrade to OneFS 9.5 has been committed. So if you’re running a SmartPools policy job during an upgrade, you’ll have the preexisting behavior, which is to send the file wherever the file pool policy instructs it to go. It’s also worth noting that, even though the default transfer limit is set on commit, a job that was already running when the upgrade was committed must be paused and resumed for the new limit behavior to take effect. This is because the configuration is loaded lazily when the job workers start up, so running workers do not see the change until they are restarted.

SmartPools itself needs to be licensed on a cluster in order for transfer limits to work. Limits can be configured at the tier or nodepool level. However, if you change the limit of a tier, it automatically applies to all its child nodepools, regardless of any prior child limit configurations. The transfer limit feature can also be disabled, which results in the same spillover behavior OneFS has always displayed, and any configured limits will not be respected.
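The tier-to-nodepool behavior can be pictured as a simple overwrite. This is a sketch under assumed data structures: the `set_tier_limit` function and the plain dictionaries it operates on are hypothetical, and the tier and pool names are made up for the example.

```python
from typing import Dict, List


def set_tier_limit(
    limits: Dict[str, float],
    tier_children: Dict[str, List[str]],
    tier: str,
    limit_pct: float,
) -> Dict[str, float]:
    """Return a new limit map with `tier` and all its nodepools set.

    Any limit previously set on a child nodepool is overwritten,
    mirroring the behavior described in the text.
    """
    updated = dict(limits)
    updated[tier] = limit_pct
    for child in tier_children.get(tier, []):
        updated[child] = limit_pct
    return updated


# Hypothetical tier with two nodepools, one of which had its own limit.
children = {"tier_fast": ["np_a", "np_b"]}
before = {"np_a": 70.0}
after = set_tier_limit(before, children, "tier_fast", 85.0)
```

Here `after` maps `tier_fast`, `np_a`, and `np_b` all to 85.0, so the earlier 70.0 limit on `np_a` no longer applies.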

Note that a filepool policy’s transfer limits algorithm does not consider the size of the file when deciding whether to move it to the policy’s target storagepool, regardless of whether the file is empty, or a large file. Similarly, a target storagepool’s usage must exceed its transfer limit before the filepool policy will stop moving data to that target pool. The assumption here is that any storagepool usage overshoot is insignificant in scale compared to the capacity of a cluster’s storagepool.
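The overshoot described above follows from checking usage before each move without looking at the file's size. The sketch below illustrates that under simplified assumptions: the `apply_policy` function is hypothetical, capacity is tracked in abstract units, and the candidate files are processed one at a time.

```python
from typing import List, Tuple


def apply_policy(
    file_sizes: List[int],
    used: int,
    capacity: int,
    limit_pct: float,
) -> Tuple[List[int], int]:
    """Move files to a target pool until its usage exceeds the limit.

    File size is deliberately ignored in the decision, as in the text:
    only the pool's current usage percentage is compared to the limit.
    Returns the sizes that were moved and the resulting used capacity.
    """
    moved: List[int] = []
    for size in file_sizes:
        usage_pct = 100.0 * used / capacity
        if usage_pct > limit_pct:
            break            # usage has exceeded the limit: stop moving data
        used += size         # the move happens regardless of file size
        moved.append(size)
    return moved, used


# A pool at 89% with a 90% limit accepts one more 5-unit file and ends
# at 94%, so the limit is overshot before transfers stop.
moved, used = apply_policy([5, 5, 5], used=89, capacity=100, limit_pct=90.0)
```

In this run only the first file is moved, leaving the pool at 94 of 100 units. The overshoot is bounded by what is moved between usage checks, which is the quantity the text assumes to be small relative to pool capacity.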

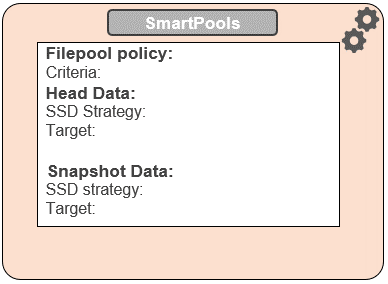

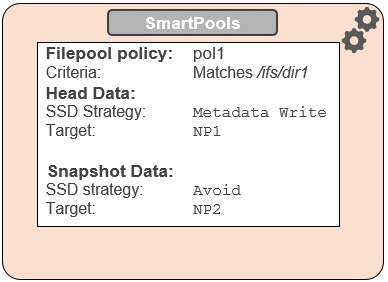

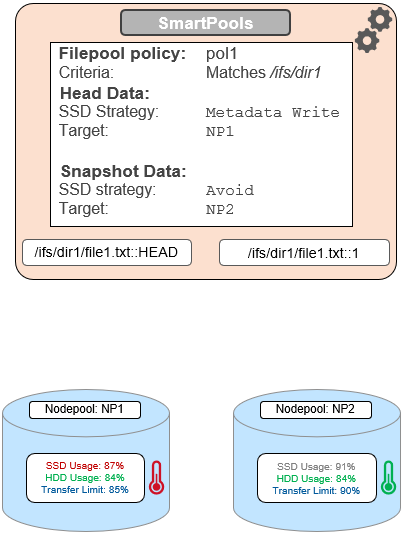

A SmartPools file pool policy allows you to send snapshot and HEAD data blocks to different targets, if so desired.

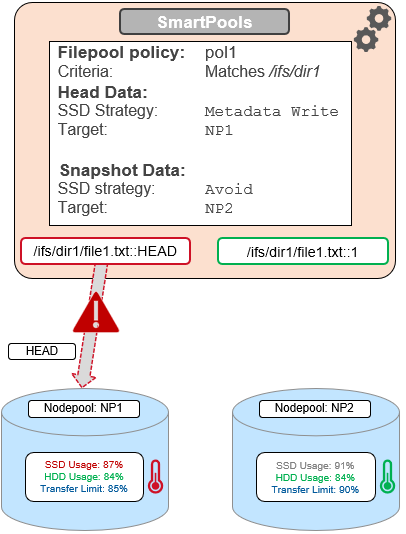

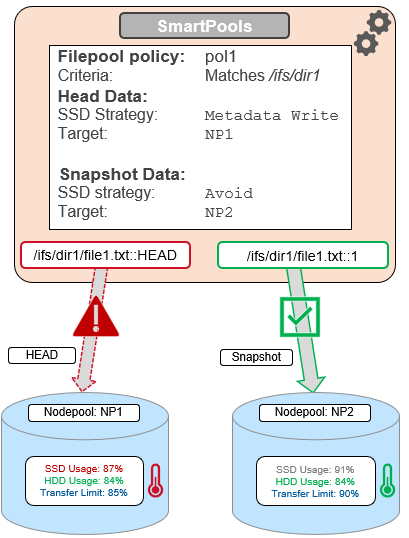

Because the transfer limit applies to the storagepool itself, and not to the file pool policy, it’s important to note that a single file pool policy with different storagepool targets can be partially applied. The HEAD data blocks may be moved, while the snapshot blocks stay put because the snapshot target is a storage pool that has exceeded its transfer limit.

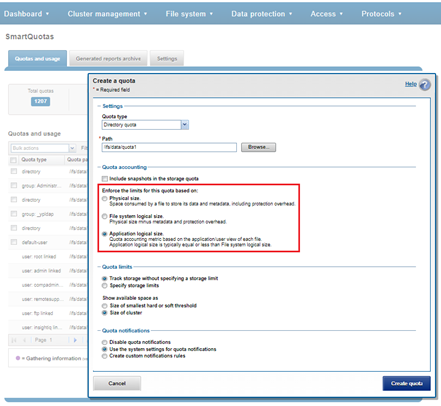

File pool policies also allow you to specify how a mixed node’s SSDs are used: either as L3 cache, or as an SSD strategy for HEAD and snapshot blocks. If the SSDs in a node are configured for L3, they are not being used for storage, so any transfer limits are irrelevant to them. As an alternative to L3 cache, SmartPools offers three main categories of SSD strategy: ‘avoid’, which sends all blocks to HDD; ‘data’, which sends everything to SSD; and ‘metadata read’ or ‘metadata read-write’, which send varying numbers of metadata mirrors to SSD and data blocks to hard disk.

To reflect this, SmartPools transfer limits are slightly nuanced when it comes to SSD strategies. That is, if the storagepool target contains both HDD and SSD, the usage capacity of both mediums needs to be below the transfer limit in order for the file to be moved to that target. For example, take two node pools, NP1 and NP2.

A file pool policy, Pol1, is configured to match all files under /ifs/dir1, with an SSD strategy of metadata write and pool NP1 as the target for HEAD data blocks. For snapshots, the target is NP2 with an ‘avoid’ SSD strategy, so both snapshot data and metadata are written to hard disk only.

When a SmartPools job runs and attempts to apply this file pool policy, it sees that SSD usage is above the 85% configured transfer limit for NP1. So, even though the hard disk capacity usage is below the limit, neither HEAD data nor metadata will be sent to NP1.

For the snapshot, the SSD usage is also above the NP2 pool’s transfer limit of 90%.

However, since the SSD strategy is ‘avoid’, and because the hard disk usage is below the limit, the snapshot’s data and metadata get successfully sent to the NP2 HDDs.
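The NP1 and NP2 example can be sketched as a per-medium check. This is an illustration only, not the OneFS algorithm: the `PoolUsage` type and `can_target` function are hypothetical, and the usage percentages are assumed values chosen to match the scenario (SSD above the limit, HDD below it). Only the 85% and 90% limits come from the text. The sketch also assumes that every strategy other than ‘avoid’ checks both media.

```python
from dataclasses import dataclass


@dataclass
class PoolUsage:
    hdd_pct: float    # assumed HDD usage, for illustration
    ssd_pct: float    # assumed SSD usage, for illustration
    limit_pct: float  # configured transfer limit


def can_target(pool: PoolUsage, ssd_strategy: str) -> bool:
    """Decide whether a policy may move blocks to `pool`.

    With an 'avoid' strategy nothing lands on SSD, so only HDD usage is
    checked. Otherwise both media must be within the limit (assumption).
    """
    media = [pool.hdd_pct]
    if ssd_strategy != "avoid":
        media.append(pool.ssd_pct)
    return all(usage <= pool.limit_pct for usage in media)


np1 = PoolUsage(hdd_pct=70.0, ssd_pct=88.0, limit_pct=85.0)
np2 = PoolUsage(hdd_pct=70.0, ssd_pct=93.0, limit_pct=90.0)

head_ok = can_target(np1, "metadata-write")  # False: NP1 SSD is over 85%
snap_ok = can_target(np2, "avoid")           # True: only NP2 HDD is checked
```

With these assumed figures the HEAD blocks stay where they are, while the snapshot blocks move to the NP2 hard disks, matching the outcome described above.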