This is this article we’re looking at the good and not-so-good sides of the data warehouse and its potential replacement, the data lakehouse. In this article, we’re checking out the things the data lakehouse needs to meet if it’s going to fully replace the traditional warehouse.

The initial article “What is Data Lakehouse?” introduces the data warehouse and examines its unique features. In the second article “Explaining Data Lakehouse as Cloud-native DW“, we explore data lakehouse architecture, aiming to adjust the essential requirements of data warehouse architecture to align with the priorities of cloud-native software design. Moving on, the fourth article will focus on the role of data modelling in creating, maintaining, and utilizing the lakehouse. Lastly, the final article will evaluate both the differences and the equally important similarities between the lakehouse and the platform-as-a-service (PaaS) data warehouse.

A Quick Recap of Data Lakehouse Architecture

The ideal data lakehouse architecture is like a puzzle where each piece works independently, unlike the classic data warehouse architecture. When I say “ideal,” I mean the perfect design of this architecture. For instance, it breaks down the data warehouse capabilities into basic software functions (explained in the “Explaining Data Lakehouse as Cloud-native DW”) that operate as separate services.

These services are “loosely coupled,” meaning they communicate through well-designed APIs. They don’t need to know the internal details of the other services they interact with. Loose coupling is a fundamental principle of cloud-native software design, as discussed in previous articles. The ideal lakehouse is created by stacking these services on top of each other, allowing us, in theory, to replace one service’s functions with another.

An alternative, practical approach links the data lake and data lakehouse services. Prominent providers like Databricks and Dremio have adopted this approach in their combined data lake/house implementations. This practical method has advantages compared to the ideal data lakehouse architecture, as we’ll explore.

It’s crucial to understand that while the tightly connected nature of a classic data warehouse has downsides, it also has advantages. Loose coupling can be a point of failure, especially when coordinating multiple, transaction-like operations in a distributed software architecture with independent services.

The Technical Side of Data Warehouse Architecture

Let’s break down the formal, technical requirements of data warehouse architecture. To understand if the data lakehouse can truly replace the data warehouse, we need to see if its capabilities align with these requirements.

From a data warehouse perspective, what matters most is not just getting query results quickly but ensuring these results are consistent and reproducible. Striking a balance between speed, uniformity, and reproducibility is a real challenge.

Implementing this is trickier than it sounds. That’s why solutions like Hive + Hadoop struggled as data warehouse replacements. Even distributed NoSQL systems often face issues when trying to step into the shoes of traditional databases or data warehouses.

Now, let’s go through the specific requirements of data warehouse architecture:

- Central Data Repository: It serves as a single, central storage for business data, both current and historical.

- Panoptic View: Allows a comprehensive view across the entire business and its functional areas.

- Monitoring/Feedback Loop: Enables monitoring and feedback mechanisms into the business’s performance.

- User Queries: Supports users in asking common or unpredictable (ad hoc) questions.

- Consistent Query Results: Ensures that everyone gets the same data through consistent and uniform query results.

- Concurrent Workloads: Handles concurrent jobs and users along with demanding mixed workloads.

- Data Management Controls: Enforces strict controls on data management and processing.

- Conflict Resolution: Anticipates and resolves conflicts arising from the simultaneous requirements of consistency, uniformity, and data processing controls.

Does the data lakehouse meet these criteria? It depends on how you implement the architecture. If you set up your lakehouse by using a SQL query service on a curated data lake section, you’ll likely address requirements 1 through 4. However, handling requirements 5 through 8, which involve enforcing consistency and managing conflicts during concurrent operations, can be challenging for this type of implementation.

Reality Check: Maintaining Data Integrity Matters

In a typical, closely connected data warehouse setup, the warehouse often uses a relational database, or RDBMS. Most RDBMSs have safeguards known as ACID, ensuring they can handle multiple operations on data simultaneously while maintaining strong consistency.

While ACID safeguards are commonly linked with online transaction processing (OLTP) and RDBMS, it’s essential to clarify that a data warehouse isn’t an OLTP system. You don’t necessarily need to set up a data warehouse on an RDBMS.

To simplify, the database engine in a data warehouse requires two things: a data store that can create and manage tables, and logic to resolve conflicts arising from concurrent data operations. It’s possible to design the data warehouse as an append-only data store, committing new records over time, like adding new rows. With this approach, you avoid concurrency conflicts by only appending new records without changing or deleting existing ones. Coordination logic ensures that multiple users or jobs querying the warehouse simultaneously get the same records.

However, in reality, the most straightforward way to meet these requirements is by using an RDBMS. An RDBMS is optimized to efficiently perform essential analytical operations, like various types of joins. This is why the traditional on-premises data warehouse is often synonymous with the RDBMS. Attempts to replace it with alternatives like Hadoop + Hive have typically fallen short.

It’s also why nearly all Platform-as-a-Service (PaaS) data warehouse services mimic RDBMS systems. As mentioned in a Explaining Data Lakehouse as Cloud-native DW article, if you choose to avoid in-database ACID safeguards, you must either build ACID logic into your application code, create and manage your own ACID-compliant database, or delegate this responsibility to a third-party database. In essence, maintaining data integrity is crucial.

Ensuring Data Consistency in Workloads

Whether we like it or not, production data warehouse workloads demand consistency, uniformity, and replicability. Imagine core business operations regularly querying the warehouse. In a real-world scenario, the data lakehouse replacing it must handle hundreds of such queries every second.

Let’s break it down with an example – think of a credit application process that queries the lakehouse for credit scores multiple times per second. Statutory and regulatory requirements demand that simultaneous queries return accurate results, using the same scoring model and point-in-time data adjusted for customer variations.

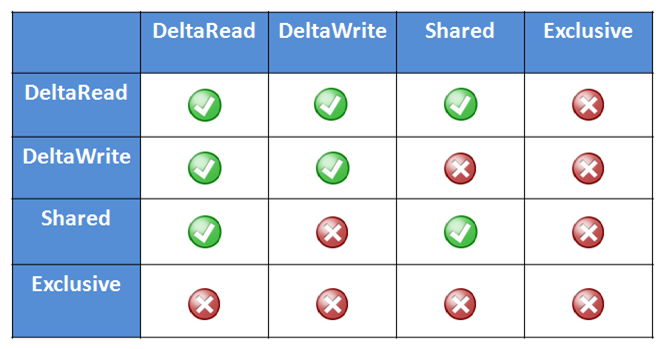

Now, what if a concurrent operation tries to update the data used for the model’s parameters? In a traditional RDBMS setup, ACID safeguards ensure this update only happens after committing the results of dependent credit-scoring operations.

Can a SQL query service do the same? Can it maintain these safeguards even when objects in the data lake’s curated zone are accessible to other services, like an AWS Glue ETL service, which may update data simultaneously?

This example is quite common in real-world scenarios. In simple terms, if you want consistent, uniform, and replicable results, you need ACIDic safeguards. This is why data warehouse workloads insist on having these safeguards in place.

Can Data Lakehouse Architecture Ensure These Safeguards?

The answer isn’t straightforward. The first challenge revolves around the difficulty of coordinating operations across loosely connected services. For instance, how can an independent SQL query service limit access to records in an independent data lake service? This limitation is crucial to prevent multiple users from changing items in the lake’s curated area. In a tightly connected RDBMS, the database kernel handles this by locking rows in the table(s) where dependent data is stored, preventing other operations from altering them. The process is not as clear-cut in data lakehouse architecture with its layered stack of detached services.

A well-designed data lakehouse service should be able to enforce safeguards similar to ACID—especially if it controls concurrent access and modifications to objects in its data lake layer. Databricks and Dremio have addressed this challenge in their data lakehouse architecture implementations. They achieve this by reducing the loose coupling between services, ensuring more effective coordination of concurrent access and operations on shared resources.

However, achieving strong consistency becomes much tougher when the data lakehouse is structured as a stack of loosely connected, independent services. For example, having a distinct SQL query service on top of a separate data lake service, which sits on its own object storage service. In such a setup, it becomes challenging to ensure strong consistency because there’s limited control over access to objects in the data lake.

Closing Thoughts: Navigating Distributed Challenges

In any distributed system, the main challenge is coordinating simultaneous access to shared resources while handling various operations on these resources across different locations and times. This applies whether software functions and their resources are closely or loosely connected.

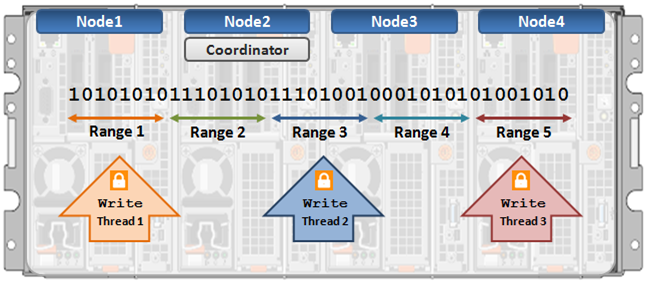

For instance, a classic data warehouse tackles distributed processing by becoming a massively parallel processing (MPP) database. The MPP database kernel efficiently organizes and coordinates operations across nodes in the MPP cluster, resolving conflicts between operations. In simple terms, it makes sure it can enforce strict ACID safeguards while dealing with multiple operations happening at the same time across different places.

On the flip side, a loosely connected distributed software architecture, like data lakehouse architecture, deals with the challenge of coordinating access and managing dependencies across essentially independent services. It’s a tricky problem.

This complexity is one reason why the data lakehouse, much like the data lake itself, typically operates as what’s called an eventually consistent platform rather than a strongly consistent one.

On one hand, it can enforce ACID-like safeguards; on the other hand, it may lose data and struggle to consistently replicate results. Enforcing strict ACID safeguards would mean combining the data lakehouse and the data lake into one platform—closely connecting both services to each other. This seems to be the likely direction in the evolution of data lake/lakehouse concepts, assuming the idea of the data lakehouse sticks around.

However, implementing the data lakehouse as its own data lake essentially mirrors the evolution of the data warehouse. It involves closely connecting the lakehouse and the lake, creating a dependency on a single software platform and provider.

Stay tuned for the next article in this series, where we’ll explore the use of data modeling with the data lakehouse.