The basic ability to export a cluster’s configuration, which can then be used to perform a config restore, has been available since OneFS 9.2. However, OneFS 9.7 brings an evolution of the cluster configuration backup and restore architecture, plus a significant expansion in the breadth of supported OneFS components, which now include authentication, networking, multi-tenancy, replication, and tiering.

A configuration export and import can be performed via either the OneFS CLI or platform API, and encompasses the following OneFS components for configuration backup and restore:

| Component | Configuration / Action | Release |
|---|---|---|
| Auth | Roles: Backup / Restore<br>Users: Backup / Restore<br>Groups: Backup / Restore | OneFS 9.7 |
| Filepool | Default-policy: Backup / Restore<br>Policies: Backup / Restore | OneFS 9.7 |
| HTTP | Settings: Backup / Restore | OneFS 9.2+ |
| NDMP | Users: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| Network | Groupnets: Backup / Restore<br>Subnets: Backup / Restore<br>Pools: Backup / Restore<br>Rules: Backup / Restore<br>DNScache: Backup / Restore<br>External: Backup / Restore | OneFS 9.7 |
| NFS | Exports: Backup / Restore<br>Aliases: Backup / Restore<br>Netgroup: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| Quotas | Quotas: Backup / Restore<br>Quota notifications: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| S3 | Buckets: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| SmartPools | Nodepools: Backup<br>Tiers: Backup<br>Settings: Backup / Restore | OneFS 9.7 |
| SMB | Shares: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| Snapshots | Schedules: Backup / Restore<br>Settings: Backup / Restore | OneFS 9.2+ |
| SmartSync | Accounts: Backup / Restore<br>Certificates: Backup<br>Base-policies: Backup / Restore<br>Policies: Backup / Restore<br>Throttling: Backup / Restore | OneFS 9.7 |
| SyncIQ | Policies: Backup / Restore<br>Certificates: Backup<br>Rules: Backup<br>Settings: Backup / Restore | OneFS 9.7 |
| Zone | Zones: Backup / Restore | OneFS 9.7 |
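Most items in the table support both backup and restore, but a handful (SmartPools nodepools and tiers, and the SmartSync and SyncIQ certificates and rules) are backup-only. The following Python sketch transcribes the table into a plain dictionary so those exceptions can be queried. The names `SUPPORT_MATRIX`, `both()`, `backup_only_items()`, and `components_new_in()` are hypothetical helpers for illustration, not part of any OneFS interface.

```python
# Hypothetical model of the component support table above. This is NOT a
# OneFS API; it only encodes which items each component can back up and/or
# restore, so questions like "what is backup-only?" can be answered in code.

BACKUP_RESTORE = frozenset({"backup", "restore"})
BACKUP_ONLY = frozenset({"backup"})


def both(*items):
    """Mark every listed item as supporting both backup and restore."""
    return {item: BACKUP_RESTORE for item in items}


# component -> (release, {configuration item: supported operations})
SUPPORT_MATRIX = {
    "Auth": ("OneFS 9.7", both("Roles", "Users", "Groups")),
    "Filepool": ("OneFS 9.7", both("Default-policy", "Policies")),
    "HTTP": ("OneFS 9.2+", both("Settings")),
    "NDMP": ("OneFS 9.2+", both("Users", "Settings")),
    "Network": ("OneFS 9.7", both("Groupnets", "Subnets", "Pools", "Rules",
                                  "DNScache", "External")),
    "NFS": ("OneFS 9.2+", both("Exports", "Aliases", "Netgroup", "Settings")),
    "Quotas": ("OneFS 9.2+", both("Quotas", "Quota notifications", "Settings")),
    "S3": ("OneFS 9.2+", both("Buckets", "Settings")),
    "SmartPools": ("OneFS 9.7", {"Nodepools": BACKUP_ONLY,
                                 "Tiers": BACKUP_ONLY, **both("Settings")}),
    "SMB": ("OneFS 9.2+", both("Shares", "Settings")),
    "Snapshots": ("OneFS 9.2+", both("Schedules", "Settings")),
    "SmartSync": ("OneFS 9.7", {**both("Accounts"),
                                "Certificates": BACKUP_ONLY,
                                **both("Base-policies", "Policies",
                                       "Throttling")}),
    "SyncIQ": ("OneFS 9.7", {**both("Policies"), "Certificates": BACKUP_ONLY,
                             "Rules": BACKUP_ONLY, **both("Settings")}),
    "Zone": ("OneFS 9.7", both("Zones")),
}


def backup_only_items():
    """Return (component, item) pairs that can be exported but not restored."""
    return [
        (component, item)
        for component, (_release, items) in SUPPORT_MATRIX.items()
        for item, ops in items.items()
        if "restore" not in ops
    ]


def components_new_in(release):
    """Return the components whose support first appeared in `release`."""
    return [c for c, (rel, _items) in SUPPORT_MATRIX.items() if rel == release]


print(backup_only_items())
print(components_new_in("OneFS 9.7"))
```

Keeping the per-item operations as sets, rather than a single per-component flag, mirrors the table's granularity: SmartPools settings restore even though its nodepools and tiers do not.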

In addition to the expanded component support above, the principal feature enhancements added to cluster configuration backup and restore in OneFS 9.7 include:

- Addition of a daemon to manage backup/restore jobs.

- The ability to lock the configuration during a backup.

- Support for custom rules when restoring subnet IP addresses.

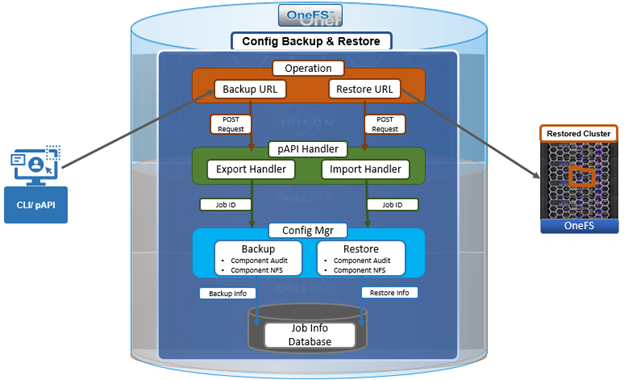

Let’s first take a look at the overall architecture. The legacy cluster configuration backup and restore infrastructure in OneFS 9.6 and earlier was as follows:

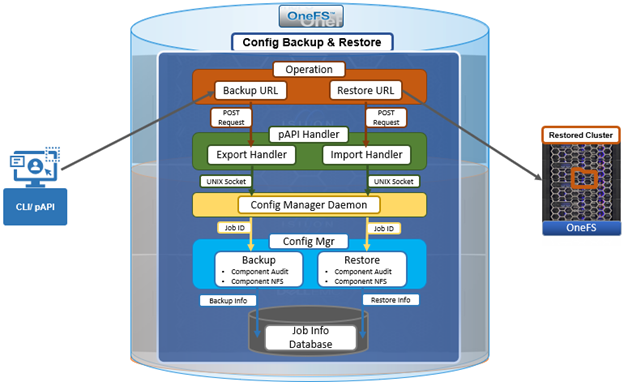

By way of contrast, OneFS 9.7 adds a new configuration manager daemon, which introduces a fifth layer to the stack while also increasing security and guaranteeing configuration consistency and idempotency.

The various layers in this OneFS 9.7 architecture can be characterized as follows:

| Architectural Layer | Description |
|---|---|
| User Interface | Allows users to submit operations through multiple interfaces, such as the platform API or the CLI. |
| pAPI Handler | Performs different actions according to the incoming requests. |
| Config Manager Daemon | New daemon in OneFS 9.7 that manages backup and restore jobs. |
| Config Manager | Core layer that executes the jobs called by the pAPI handlers. |
| Database | Lightweight database that manages asynchronous jobs, tracking their state and receiving task data. |

The new configuration management (ConfigMgr) daemon receives job requests from the platform API export and import handlers, and launches the corresponding backup and restore jobs as required. The backup and restore jobs call a specific component’s pAPI handler in order to export or import its configuration data. The exported configuration data itself is saved under /ifs/data/Isilon_Support/config_mgr/backup/, while the job information and context are saved to a SQLite job information database that resides at /ifs/.ifsvar/modules/config_mgr/config.sqlite.
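The handler-to-daemon-to-job flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `jobs` table and its columns, the per-component `HANDLERS` functions, and the `backup-<id>` directory layout are all hypothetical, since the real config.sqlite schema and export format are not described here. It uses an in-memory database and a temporary directory rather than the /ifs paths above.

```python
import json
import sqlite3
import tempfile
from pathlib import Path

# Hypothetical stand-ins for component pAPI handlers: each returns that
# component's configuration as a dict. Real OneFS handlers are not modeled.
HANDLERS = {
    "nfs": lambda: {"exports": [{"id": 1, "paths": ["/ifs/data"]}]},
    "smb": lambda: {"shares": [{"name": "data", "path": "/ifs/data"}]},
}


def open_job_db(path=":memory:"):
    """Create a minimal job-tracking table (an assumed schema)."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS jobs ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " kind TEXT NOT NULL,"
        " components TEXT NOT NULL,"
        " state TEXT NOT NULL)"
    )
    return db


def submit_job(db, kind, components):
    """Export/import handler role: record a pending job and return its ID."""
    cur = db.execute(
        "INSERT INTO jobs (kind, components, state) VALUES (?, ?, 'pending')",
        (kind, json.dumps(components)),
    )
    db.commit()
    return cur.lastrowid


def run_backup_job(db, job_id, backup_root):
    """Daemon role: call each component handler and save its output."""
    (components,) = db.execute(
        "SELECT components FROM jobs WHERE id = ?", (job_id,)
    ).fetchone()
    db.execute("UPDATE jobs SET state = 'running' WHERE id = ?", (job_id,))
    try:
        out_dir = Path(backup_root) / f"backup-{job_id}"
        out_dir.mkdir(parents=True)
        for name in json.loads(components):
            data = HANDLERS[name]()  # KeyError for an unknown component
            (out_dir / f"{name}.json").write_text(json.dumps(data))
        state = "succeeded"
    except Exception:
        state = "failed"
    db.execute("UPDATE jobs SET state = ? WHERE id = ?", (state, job_id))
    db.commit()
    return state


with tempfile.TemporaryDirectory() as root:
    db = open_job_db()
    good = submit_job(db, "backup", ["nfs", "smb"])
    bad = submit_job(db, "backup", ["unknown"])
    print(run_backup_job(db, good, root), run_backup_job(db, bad, root))
```

Recording the job before running it is what lets a separate process (here, the caller; in OneFS, the daemon) pick it up asynchronously and lets its final state survive a failure of the job itself.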

Enabled by default, the ConfigMgr daemon runs as a OneFS service, and can be viewed and managed as such:

    # isi services -a | grep -i config_mgr
    isi_config_mgr_d     Config mgr Daemon     Enabled

This isi_config_mgr_d daemon is managed by MCP, OneFS’ main utility for distributed service control across a cluster.

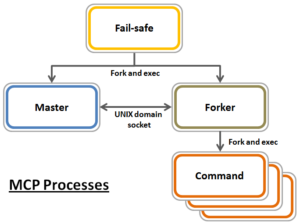

MCP is responsible for starting, monitoring, and restarting failed services on a cluster. It also monitors configuration files and acts upon configuration changes, propagating local file changes to the rest of the cluster. MCP actually consists of three processes, one for each of its modes (master, forker, and failsafe):

The ‘Master’ is the central MCP process and does the bulk of the work. It monitors files and services, including the failsafe process, and delegates actions to the forker process.

The role of the ‘Forker’ is to receive command-line actions from the master over a UNIX domain socket, execute them, and return the resulting exit codes. If the forker is inadvertently or intentionally killed, the master process automatically restarts it and, if necessary, keeps retrying at an increasing interval. If MCP still has not managed to restart the forker after around ten minutes, it fires off a CELOG alert and continues trying. A second alert is then sent after thirty minutes.
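The retry-and-alert behavior described above can be sketched as a simple schedule. This is a hypothetical model, not MCP's actual algorithm: the text says only that the interval increases and that alerts follow at roughly ten and thirty minutes, so the 1-second starting interval, the doubling, and the 60-second cap are assumptions, and both alert thresholds are read here as time elapsed since the failures began.

```python
# Hypothetical model of MCP's forker-restart schedule. The starting interval,
# doubling, and 60 s cap are assumptions; only the ~10 and ~30 minute alert
# points come from the text, both read here as time since failures began.
ALERT_AFTER_SECONDS = (10 * 60, 30 * 60)


def restart_events(horizon, first_interval=1, max_interval=60):
    """List (elapsed_seconds, event) pairs for a forker that never recovers."""
    events = []
    pending_alerts = list(ALERT_AFTER_SECONDS)
    elapsed, interval = 0, first_interval
    while elapsed <= horizon:
        # Raise any alert whose threshold has been reached by this attempt.
        while pending_alerts and elapsed >= pending_alerts[0]:
            events.append((elapsed, "alert"))
            pending_alerts.pop(0)
        events.append((elapsed, "restart"))
        elapsed += interval
        interval = min(interval * 2, max_interval)  # increasing, then capped
    return events


alerts = [t for t, kind in restart_events(horizon=3600) if kind == "alert"]
print(alerts)
```

Capping the interval keeps retries reasonably frequent once the backoff has grown, while the alerts are raised only once each, so a persistently failing forker does not flood the event log.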

MCP ensures the correct state of each service on a node. Because isi_config_mgr_d is marked ‘enabled’ by default, MCP runs its start action until the PID confirms that the daemon is running. MCP monitors services by observing their PID files (under /var/run), plus the process table itself, to determine whether a process is already running. It then compares this state against the service’s ‘enabled/disabled’ configuration to determine whether any start or stop actions are required.
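The reconciliation logic described above (observe a PID file and the process table, compare against the enabled/disabled setting, decide on start or stop) can be sketched in Python. This is an illustration, not MCP's implementation: the PID file name is hypothetical, and `os.kill(pid, 0)` is used as a generic POSIX liveness probe standing in for however MCP actually inspects the process table. It is POSIX-only.

```python
import os
import tempfile
from pathlib import Path


def is_running(pid_file: Path) -> bool:
    """Check a PID file against the process table (POSIX only)."""
    try:
        pid = int(pid_file.read_text().strip())
    except (FileNotFoundError, ValueError):
        return False  # no PID file, or unreadable contents
    if pid <= 0:
        return False  # 0 or negative would address process groups, not a PID
    try:
        os.kill(pid, 0)  # signal 0: existence check only, nothing is delivered
    except (ProcessLookupError, OverflowError):
        return False  # stale PID file, or an impossible PID value
    except PermissionError:
        return True  # process exists but is owned by another user
    return True


def required_action(enabled: bool, running: bool):
    """Compare desired (configured) state with observed state."""
    if enabled and not running:
        return "start"
    if not enabled and running:
        return "stop"
    return None  # already in the desired state


# Demo with a throwaway PID file that holds this process's own PID.
with tempfile.TemporaryDirectory() as tmp:
    pid_file = Path(tmp) / "isi_config_mgr_d.pid"  # illustrative name only
    pid_file.write_text(str(os.getpid()))
    print(required_action(enabled=True, running=is_running(pid_file)))
```

Checking the process table as well as the PID file matters because a PID file can outlive the process that wrote it; trusting the file alone would leave a crashed daemon marked as running.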

If a configuration restore job terminates abnormally, its status is updated in the job information database and MCP attempts to restart the daemon. If a configuration backup job fails, the daemon additionally helps to free the configuration lock. While a backup job is running, it locks the configuration to prevent changes until the backup is complete, guarding against any potential race-induced inconsistencies in the configuration data. Because config backup jobs typically execute quickly, the locking effect on the cluster is minimal. Also, config locking does not impact POST, PUT, or DELETE changes that are already in progress. Once it completes successfully, the backup job automatically relinquishes its configuration lock(s). Additionally, the ‘isi cluster config lock’ CLI command set can be used both to view the lock state and to manually modify (enable or disable) the configuration locks.
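The lock-for-the-duration-of-the-backup pattern described above maps naturally onto a context manager. The Python sketch below is a hypothetical model: `ConfigStore`, `ConfigLockedError`, and `run_backup()` are illustrative names, and the `try`/`finally` stands in for the role the text assigns to the daemon, releasing the lock whether the backup succeeds or fails. It does not model the `isi cluster config lock` commands.

```python
from contextlib import contextmanager


class ConfigLockedError(RuntimeError):
    """Raised when a new configuration change arrives while locked."""


class ConfigStore:
    """Hypothetical stand-in for cluster configuration guarded by a lock."""

    def __init__(self):
        self.settings = {}
        self.locked = False

    def apply_change(self, key, value):
        # New changes are refused while a backup holds the lock. A change
        # that already passed this check is not interrupted.
        if self.locked:
            raise ConfigLockedError(f"configuration locked; retry {key!r} later")
        self.settings[key] = value

    @contextmanager
    def locked_for_backup(self):
        self.locked = True
        try:
            yield
        finally:
            self.locked = False  # released on success and on failure alike


def run_backup(store, fail=False):
    """Take a consistent copy of the settings while holding the lock."""
    with store.locked_for_backup():
        snapshot = dict(store.settings)  # no writer can change it mid-copy
        if fail:
            raise RuntimeError("simulated backup failure")
        return snapshot


store = ConfigStore()
store.apply_change("smb.enabled", True)
print(run_backup(store), store.locked)
```

Because the copy is taken while no new change can land, the exported data reflects a single point in time, which is the race the text says the lock guards against.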

The other main enhancement to configuration backup and restore in OneFS 9.7 is the ability to create custom rules for restoring subnet IP addresses. This allows IP addresses that differ from those in the backup to be assigned when restoring the network configuration on a target cluster. As such, a network configuration restore does not have to overwrite the target’s existing subnet and pool IP addresses, avoiding a potential connectivity disruption.
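The idea of a subnet restore rule can be illustrated with Python's standard `ipaddress` module. This sketch is an assumption throughout: OneFS's actual rule syntax is not described here, so the rule format (a mapping from a source CIDR found in the backup to a target CIDR on the destination cluster) and the choice to preserve each address's host offset within its subnet are purely hypothetical design choices.

```python
import ipaddress


def remap_ip(ip, rules):
    """Translate a backed-up IP address using hypothetical subnet rules.

    rules maps a source CIDR (as found in the backup) to a target CIDR on the
    destination cluster. The host offset inside the subnet is preserved.
    """
    addr = ipaddress.ip_address(ip)
    for src, dst in rules.items():
        src_net = ipaddress.ip_network(src)
        dst_net = ipaddress.ip_network(dst)
        if addr in src_net:
            if src_net.prefixlen != dst_net.prefixlen:
                raise ValueError(f"rule {src} -> {dst}: prefix lengths differ")
            offset = int(addr) - int(src_net.network_address)
            return str(dst_net.network_address + offset)
    return ip  # no rule matched: keep the address from the backup


def remap_pool_range(start, end, rules):
    """Apply the rules to both ends of an IP pool range."""
    return remap_ip(start, rules), remap_ip(end, rules)


rules = {"10.10.1.0/24": "192.168.50.0/24"}
print(remap_pool_range("10.10.1.20", "10.10.1.40", rules))
```

Preserving the host offset keeps each pool's size and internal layout intact on the target cluster, and requiring matching prefix lengths guarantees every remapped address still fits inside the target subnet.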

In the next article in this series we’ll take a look at the operation and management of cluster configuration backup and restore.