OneFS 9.3 introduces a new filesystem storage efficiency feature which stores a small file’s data within the inode, rather than allocating additional storage space. The principal benefits of data inlining in OneFS include:

- Reduced storage capacity utilization for small file datasets, generating an improved cost per TB ratio.

- Dramatically improved SSD wear life.

- Potential read and write performance improvements for small files.

- Zero configuration, adaptive operation, and full transparency at the OneFS file system level.

- Broad compatibility with other OneFS data services, including compression and deduplication.

Data inlining explicitly avoids allocation during write operations since small files do not require any data or protection blocks for their storage. Instead, the file content is stored directly in unused space within the file’s inode. This approach is also highly flash media friendly since it significantly reduces the quantity of writes to SSD drives.

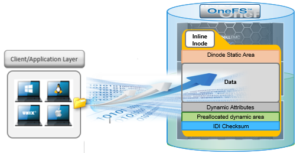

OneFS inodes, or index nodes, are a special class of data structure that store file attributes and pointers to file data locations on disk. They serve a similar purpose to traditional UNIX file system inodes, but also have some additional, unique properties. Each file system object, whether it be a file, directory, symbolic link, alternate data stream container, shadow store, etc., is represented by an inode.

Within OneFS, SSD node pools in F series all-flash nodes always use 8KB inodes. For hybrid and archive platforms, the inodes on HDD node pools are either 512 bytes or 8KB in size, and this is determined by the physical and logical block size of the hard drives or SSDs in a node pool. There are three different styles of drive formatting used in OneFS nodes, depending on the manufacturer’s specifications:

| Drive Formatting | Characteristics |
|---|---|
| 4Kn (native) | A native 4Kn drive has both a physical and logical block size of 4096B. |
| 512n (native) | A native 512n drive has both a physical and logical block size of 512B. |
| 512e (emulated) | A 512e (512 byte-emulated) drive has a physical block size of 4096B, but a logical block size of 512B. |

If the drives in a cluster’s nodepool are native 4Kn formatted, by default the inodes on this nodepool will be 8KB in size. Alternatively, if the drives are 512e formatted, then inodes by default will be 512B in size. However, they can also be reconfigured to 8KB in size if the ‘force-8k-inodes’ setting is set to true.
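The default inode sizing rule described above can be sketched as a small lookup. This is only an illustration, not OneFS code: the function name and the string labels for drive formats are hypothetical, and the 512n case is an assumption, since the text only states the defaults for 4Kn and 512e drives.

```python
INODE_SIZE_512B = 512
INODE_SIZE_8KB = 8192


def default_inode_size(drive_format: str, force_8k_inodes: bool = False) -> int:
    """Return the inode size in bytes for a nodepool (illustrative only)."""
    fmt = drive_format.lower()
    if fmt == "4kn":
        # Native 4Kn drives: inodes default to 8KB.
        return INODE_SIZE_8KB
    if fmt == "512e":
        # 512e drives: 512B inodes by default, 8KB if 'force-8k-inodes' is true.
        return INODE_SIZE_8KB if force_8k_inodes else INODE_SIZE_512B
    if fmt == "512n":
        # Assumption: the text does not give a default for 512n drives;
        # 512B is used here because both block sizes are 512B.
        return INODE_SIZE_512B
    raise ValueError(f"unknown drive format: {drive_format!r}")


print(default_inode_size("4Kn"))
print(default_inode_size("512e"))
print(default_inode_size("512e", force_8k_inodes=True))
```

Because data inlining depends on 8KB inodes, a check like this indicates which nodepools could hold inlined files at all.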

A OneFS inode is composed of several sections. These include a static area, which is typically 134 bytes in size and contains fixed-width, commonly used attributes like POSIX mode bits, owner, and file size. Next, the regular inode contains a metatree cache, which is used to translate a file operation directly into the appropriate protection group. However, for inline inodes, the metatree is no longer required, so data is stored directly in this area instead. Following this is a preallocated dynamic inode area where the primary attributes, such as OneFS ACLs, protection policies, embedded B+ Tree roots, timestamps, etc., are cached. Lastly, there is a sector where the IDI checksum code is stored.

When a file write coming from the writeback cache, or coalescer, is determined to be a candidate for data inlining, it goes through a fast write path in BSW. Compression will be applied, if appropriate, before the inline data is written to storage.


The read path for inlined files is similar to that for regular files. However, if the file data is not already available in the caching layers, it is read directly from the inode, rather than from separate disk blocks as with regular files.


Protection for inlined data operates the same way as for other inodes and involves mirroring. OneFS uses mirroring as protection for all metadata because it is simple and does not require the additional processing overhead of erasure coding. The number of inode mirrors is determined by the nodepool’s achieved protection policy, as per the table below:

| OneFS Protection Level | Number of Inode Mirrors |
|---|---|
| +1n | 2 inodes per file |
| +2d:1n | 3 inodes per file |
| +2n | 3 inodes per file |
| +3d:1n | 4 inodes per file |
| +3d:1n1d | 4 inodes per file |
| +3n | 4 inodes per file |
| +4d:1n | 5 inodes per file |
| +4d:2n | 5 inodes per file |
| +4n | 5 inodes per file |

Unlike the file inodes above, directory inodes, which comprise the OneFS single namespace, are mirrored at one level higher than the achieved protection policy. The root of the LIN Tree is the most critical metadata type and is always mirrored at 8x.
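To make the file inode mirror table concrete, the sketch below transcribes it into a lookup and estimates the raw on-disk footprint of a single inlined file. The function name is hypothetical, and the estimate assumes an 8KB inode with the file's data held entirely inside it, so that the footprint is simply the mirror count multiplied by the inode size.

```python
# File inode mirror counts, transcribed from the protection level table.
INODE_MIRRORS = {
    "+1n": 2,
    "+2d:1n": 3,
    "+2n": 3,
    "+3d:1n": 4,
    "+3d:1n1d": 4,
    "+3n": 4,
    "+4d:1n": 5,
    "+4d:2n": 5,
    "+4n": 5,
}

INODE_SIZE_8KB = 8192


def inlined_file_footprint(protection_level: str,
                           inode_size: int = INODE_SIZE_8KB) -> int:
    """Raw bytes used by one inlined file: every inode mirror carries the data.

    Assumption: no data or protection blocks are allocated, so the inode
    mirrors are the only space the file occupies.
    """
    return INODE_MIRRORS[protection_level] * inode_size


for level in ("+1n", "+2d:1n", "+4n"):
    print(level, inlined_file_footprint(level), "bytes")
```

For example, under these assumptions an inlined file at +2d:1n occupies three 8KB inodes, or 24576 bytes in total.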

Data inlining is automatically enabled by default on all 8KB formatted nodepools for clusters running OneFS 9.3, and does not require any additional software, hardware, or product licenses in order to operate. Its operation is fully transparent and, as such, there are no OneFS CLI or WebUI controls to configure or manage inlining.

In order to upgrade to OneFS 9.3 and benefit from data inlining, the cluster must be running OneFS 8.2.1 or later. A full upgrade commit to OneFS 9.3 is required before inlining becomes operational.

Be aware that data inlining in OneFS 9.3 does have some notable caveats. Specifically, data inlining will not be performed in the following instances:

- When upgrading to OneFS 9.3 from an earlier release which does not support inlining, existing data will not be inlined.

- During restriping operations, such as SmartPools tiering, when data is moved from a 512 byte diskpool to an 8KB diskpool.

- Writing CloudPools SmartLink stub files.

- On file truncation down to non-zero size.

- Sparse files (for example, NDMP sparse punch files) where allocated blocks are replaced with sparse blocks at various file offsets.

- For files within a writable snapshot.

Similarly, in OneFS 9.3 the following operations may cause data inlining to be undone, or spilled:

- Restriping from an 8KB diskpool to a 512 byte diskpool.

- Forcefully allocating blocks on a file (for example, using the POSIX ‘madvise’ system call).

- Sparse punching a file.

- Enabling CloudPools BCM on a file.

These caveats will be addressed in a future release.
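The cases in which inlining is not performed can be read as a checklist. The sketch below encodes that checklist as a simple predicate for reasoning about a given write. It is purely illustrative: `WriteContext`, its field names, and `inlining_blockers` are hypothetical and do not correspond to any OneFS API or CLI.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WriteContext:
    """Hypothetical description of a file write; every field is an assumption."""
    pool_inode_size: int = 8192
    existing_pre_upgrade_data: bool = False
    restripe_from_512b_pool: bool = False
    cloudpools_smartlink_stub: bool = False
    truncate_to_nonzero_size: bool = False
    sparse_file: bool = False
    in_writable_snapshot: bool = False


def inlining_blockers(ctx: WriteContext) -> List[str]:
    """Return the documented reasons, if any, why a write would not be inlined."""
    checks = [
        (ctx.pool_inode_size != 8192, "nodepool does not use 8KB inodes"),
        (ctx.existing_pre_upgrade_data, "existing data from a pre-9.3 release"),
        (ctx.restripe_from_512b_pool, "restripe from a 512 byte diskpool"),
        (ctx.cloudpools_smartlink_stub, "CloudPools SmartLink stub file"),
        (ctx.truncate_to_nonzero_size, "truncation down to non-zero size"),
        (ctx.sparse_file, "sparse file"),
        (ctx.in_writable_snapshot, "file within a writable snapshot"),
    ]
    return [reason for blocked, reason in checks if blocked]


print(inlining_blockers(WriteContext()))
print(inlining_blockers(WriteContext(sparse_file=True, pool_inode_size=512)))
```

An empty list means none of the documented exclusions apply. It does not by itself guarantee that the file is small enough to be inlined.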