Another significant beneficiary of new functionality in the recent OneFS 9.11 release is SmartSync. As you may recall, SmartSync allows multiple copies of a dataset to be copied, replicated, and stored across locations and regions, both on and off-prem, providing increased data resilience and the ability to rapidly recover from catastrophic events.

In addition to fast, efficient, scalable protection with granular recovery, SmartSync allows organizations to use lower-cost object storage as the target for backups, and to reduce data protection complexity and cost by eliminating the need for separate backup applications. Disaster recovery options include restoring a dataset to its original state, or cloning a new cluster.

SmartSync sees the following enhancements in OneFS 9.11:

- Automated incremental-forever replication to object storage.

- Unparalleled scalability and speed, with seamless pause/resume for robust resiliency and control.

- End-to-end encryption for security of data-in-flight and at rest.

- Complete data replication, including soft/hard links, full file paths, and sparse files.

- Object storage targets: AWS S3, AWS Glacier IR, Dell ECS/ObjectScale, and Wasabi (with the addition of Azure and GCP support in a future release).

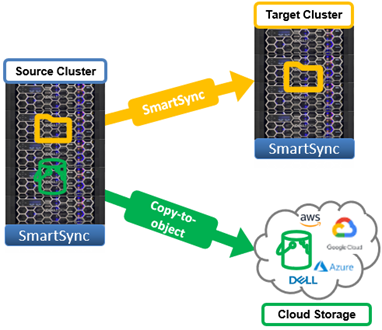

But first, a bit of background. Introduced back in OneFS 9.4, SmartSync operates in two distinct modes:

- Regular push-and-pull transfer of file data between PowerScale clusters.

- CloudCopy, copying of file-to-object data from a source cluster to a cloud object storage target.

CloudCopy copy-to-object in OneFS 9.10 and earlier releases is strictly a one-time copy tool, rather than a replication utility. After a copy, viewing the bucket contents from the AWS console or an S3 browser yields an object-format, tree-like representation of the OneFS file system. However, there are a number of significant shortcomings: no native support for attributes like ACLs or for certain file types such as character files, and no reasonable way to represent hard links. OneFS has to work around these by expanding hard links and redirecting objects whose paths are too long.

The other major limitation is that it is purely a one-and-done copy. Once a policy has been created and run, and its job has completed, the data is in the cloud, and that is it. OneFS has no provision for incrementally transferring subsequent changes to the cloud copy when the source data changes.

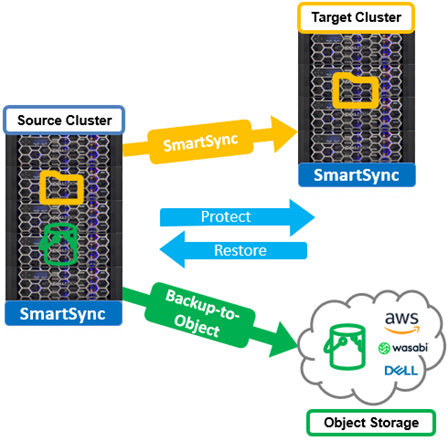

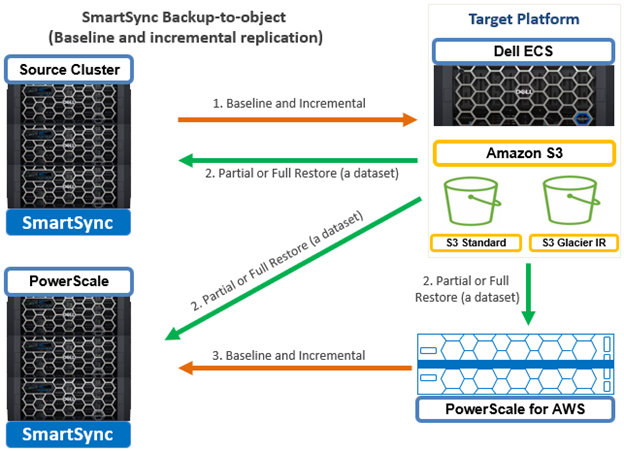

In order to address these limitations, SmartSync in OneFS 9.11 sees the addition of backup-to-object functionality. This includes a full-fidelity file system baseline, plus fast incremental replication to Dell ECS and ObjectScale, Wasabi, and AWS S3 and Glacier IR object stores.

This new backup-to-object functionality supports the full range of OneFS path lengths, encodings, and file sizes up to 16TB – plus special files and alternate data streams (ADS), symlinks and hardlinks, sparse regions, and POSIX and SMB attributes.

| Copy-to-object (OneFS 9.10 & earlier) | Backup-to-object (OneFS 9.11) |
| --- | --- |
| One-time file system copy to object | Full-fidelity file system baseline & incremental replication to object: supports ADS, special files, symlinks, hardlinks, sparseness, POSIX/NT attributes, and encoding; any file size and any path length |
| Baseline replication only, no support for incremental copies | Fast incremental copies |
| Browsable/accessible filesystem-on-object representation | Compact file system snapshot representation in native cloud |
| Certain object limitations: no support for sparseness and hardlinks; limited attribute/metadata support; no compression | Object representation: grouped by target basepath in policy configuration; further grouped by Dataset ID, Global File ID |

Architecturally, SmartSync incorporates the following concepts:

| Concept | Description |
| --- | --- |
| Account | References to systems that participate in jobs (PowerScale clusters, cloud hosts). Made up of a name, a URI, and auth info. |
| Dataset | Abstraction of a filesystem snapshot; the thing we copy between systems. Identified by Dataset IDs. |
| Global File ID | Conceptually a global LIN that references a specific file on a specific system. |
| Policy | Dataset creation policy creates a dataset. Copy/Repeat Copy policies take an existing dataset and put it on another system. Policy execution can be linked and scheduled. |
| Push/Pull, Cascade/Reconnect | Clusters syncing to each other in sequence (A>B>C). Clusters can skip baseline copy and directly perform incremental updates (A>C). Clusters can both request and send datasets. |
| Transfer resiliency | Small errors don’t need to halt a policy’s progress. |

Under the hood, SmartSync is built around the concept of a dataset, which is fundamentally an abstraction of a OneFS file system snapshot, albeit with some additional properties attached to it.

Each dataset is identified by a unique ID. With this notion of datasets, OneFS can now also perform an A-to-B replication and an A-to-C replication: two replications of the same dataset to two different targets. B and C can also reference each other and perform incremental replication between themselves, provided they share a common ancestor snapshot.
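The common-ancestor requirement can be illustrated with a small Python model. This is only a sketch for intuition, not SmartSync's actual logic: the `System` class, the integer dataset IDs, and the assumption that each system keeps its datasets oldest-first are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class System:
    """Hypothetical model of a cluster and the dataset IDs it holds."""
    name: str
    dataset_ids: List[int] = field(default_factory=list)  # oldest first


def latest_common_dataset(source: System, target: System) -> Optional[int]:
    """Return the newest dataset ID present on both systems, if any."""
    held_by_target = set(target.dataset_ids)
    for dataset_id in reversed(source.dataset_ids):
        if dataset_id in held_by_target:
            return dataset_id
    return None


def plan_transfer(source: System, target: System) -> str:
    """Choose a baseline or an incremental copy based on shared ancestry."""
    ancestor = latest_common_dataset(source, target)
    if ancestor is None:
        return "baseline"
    return f"incremental from dataset {ancestor}"


a = System("A", [101, 102, 103])
b = System("B", [101, 102])  # received datasets 101 and 102 from A
c = System("C", [101])       # received dataset 101 from A
d = System("D")              # has never synced with anyone

print(plan_transfer(b, c))   # B and C share dataset 101
print(plan_transfer(a, d))   # no shared dataset, so a baseline is needed
```

In this model, B and C can exchange incrementals directly because both still hold dataset 101, even though neither originally replicated from the other.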

A SmartSync dataset creation policy takes a snapshot and creates a dataset from it. There are also copy and repeat copy policies, which transfer that dataset to another system. The execution of these two policy types can be linked and scheduled separately. So one schedule can drive dataset creation, say creating a dataset every hour on a particular path, while another schedule drives a tiered or different distribution scheme for the copy itself. For example, a cluster can copy hourly to a hot DR cluster in data center A and also copy monthly to a deep archive cluster in data center B, all without increasing the proliferation of snapshots on the system, since the snapshots can now be shared.
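To make the decoupling of creation and copy schedules concrete, here is a toy Python simulation. It is a sketch under simplifying assumptions (time measured in whole hours, a 30-day month, datasets identified by their creation hour), and the function names are illustrative rather than anything OneFS exposes.

```python
from typing import Dict, List

HOURS_PER_MONTH = 24 * 30  # simplifying assumption: a 30-day month


def creation_times(total_hours: int, every: int) -> List[int]:
    """Hours at which the dataset creation policy produces a dataset."""
    return list(range(0, total_hours, every))


def copy_runs(total_hours: int, every: int, datasets: List[int]) -> Dict[int, int]:
    """Map each copy run (by hour) to the newest dataset available then."""
    runs = {}
    for hour in range(0, total_hours, every):
        available = [d for d in datasets if d <= hour]
        if available:
            runs[hour] = available[-1]
    return runs


window = 2 * HOURS_PER_MONTH
datasets = creation_times(window, every=1)                      # hourly creation
hot_dr = copy_runs(window, every=1, datasets=datasets)          # hourly copy
archive = copy_runs(window, every=HOURS_PER_MONTH, datasets=datasets)  # monthly copy

print(len(hot_dr), archive)
```

Both copy schedules draw from the same list of datasets, so the monthly archive copy reuses datasets the hourly creation policy already produced instead of requiring snapshots of its own.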

SmartSync in 9.11 also introduces the foundational concept of a global file ID (GFID), which is essentially a global LIN that represents a specific file on a particular system. OneFS can now use this GFID, in combination with a dataset, to reference a file anywhere and guarantee that it means the same thing across every cluster.

Security-wise, each SmartSync daemon has an identity certificate that acts as both a client and server certificate depending on the direction of the data movement. This identity certificate is signed by a non-public certificate authority. To establish trust between two clusters, they must have each other’s CAs. These CAs may be the same. Trust groups (daemons that may establish connections to each other) are formed by having shared CAs installed.
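The trust-group rule can be modeled in a few lines of Python. This is a conceptual sketch only: the `Daemon` class and the CA names are hypothetical, and real trust is established through certificate chain validation during the TLS handshake rather than string comparison.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Daemon:
    """Hypothetical model of a SmartSync daemon's certificate setup."""
    name: str
    issuer_ca: str               # CA that signed this daemon's identity certificate
    trusted_cas: FrozenSet[str]  # CAs installed on this daemon's cluster


def can_connect(a: Daemon, b: Daemon) -> bool:
    """Two daemons trust each other only if each has the other's CA installed."""
    return b.issuer_ca in a.trusted_cas and a.issuer_ca in b.trusted_cas


east = Daemon("east", "ca-east", frozenset({"ca-east", "ca-west"}))
west = Daemon("west", "ca-west", frozenset({"ca-east", "ca-west"}))
lab = Daemon("lab", "ca-lab", frozenset({"ca-lab", "ca-east"}))

print(can_connect(east, west))  # both sides hold each other's CA
print(can_connect(east, lab))   # lab trusts east's CA, but not the reverse
```

The second case shows why trust has to be mutual: because the same identity certificate serves as client or server certificate depending on the direction of data movement, a one-sided CA installation is not enough in this model.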

There are no usernames or passwords; authentication is authorization for V1. All cluster-to-cluster communication is performed via TLS-encrypted traffic. If absolutely necessary, encryption (but not authorization) can be disabled by setting a ‘NULL’ encryption cipher for specific use cases that require unencrypted traffic.

The SmartSync daemon supports checking certificate revocation status via the Online Certificate Status Protocol (OCSP). If the cluster is hardened and/or in FIPS-compliant mode, OCSP checking is forcibly enabled and set to the Strict stringency level, where any failure in OCSP processing results in a failed TLS handshake. Otherwise, OCSP checking can be totally disabled or set to a variety of values corresponding to desired behavior in cases where the responder is unavailable, the responder does not have information about the cert in question, and where information about the responder is missing entirely. Similarly, an override OCSP responder URI is configurable to support cases where preexisting certificates do not contain responder information.
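The OCSP behavior described above amounts to a small decision table, sketched here in Python. The enum members, the `tolerated` set, and the function name are assumptions made for illustration; the actual SmartSync setting names and stringency levels are not reproduced here.

```python
from enum import Enum
from typing import AbstractSet


class OcspResult(Enum):
    """Hypothetical outcomes of an OCSP check on a peer certificate."""
    GOOD = "good"
    REVOKED = "revoked"
    RESPONDER_UNAVAILABLE = "responder_unavailable"
    CERT_UNKNOWN = "cert_unknown"            # responder has no info on the cert
    NO_RESPONDER_INFO = "no_responder_info"  # cert carries no responder details


def handshake_allowed(
    result: OcspResult,
    *,
    ocsp_enabled: bool,
    tolerated: AbstractSet[OcspResult],
    hardened: bool,
) -> bool:
    """Decide whether the TLS handshake may proceed after the OCSP check."""
    if hardened:
        # Hardened/FIPS mode forces strict checking: nothing is tolerated.
        ocsp_enabled, tolerated = True, frozenset()
    if not ocsp_enabled:
        return True
    if result is OcspResult.GOOD:
        return True
    if result is OcspResult.REVOKED:
        return False
    return result in tolerated


lenient = {OcspResult.RESPONDER_UNAVAILABLE}
print(handshake_allowed(OcspResult.RESPONDER_UNAVAILABLE,
                        ocsp_enabled=True, tolerated=lenient, hardened=False))
print(handshake_allowed(OcspResult.RESPONDER_UNAVAILABLE,
                        ocsp_enabled=True, tolerated=lenient, hardened=True))
```

The same responder outage passes under the lenient configuration but fails the handshake once hardening overrides the setting, which mirrors the forced Strict level described above.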

SmartSync also supports a ‘strict hostname check’ option, which mandates that the common name and/or subject alternative name fields of the peer certificate match the URI used to connect to that peer. This option, strict OCSP checking, and disabling of the null cipher option are all forcibly set when the cluster is operating in a hardened or FIPS-compliant mode.
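As a rough illustration of what a strict hostname check involves, the Python sketch below compares the host portion of a connection URI against a certificate's common name and subject alternative names. It is a simplified assumption of the idea, not SmartSync's implementation: it does exact, case-insensitive matching only (no wildcard handling), and the URI scheme, port, and host names are made up.

```python
from typing import Iterable, Optional
from urllib.parse import urlsplit


def hostname_matches(
    uri: str,
    common_name: Optional[str],
    subject_alt_names: Iterable[str] = (),
) -> bool:
    """Return True if the URI's host equals the cert's CN or one of its SANs."""
    host = urlsplit(uri).hostname  # lowercased host without port, or None
    if host is None:
        return False
    candidates = [common_name, *subject_alt_names]
    return host in {name.lower() for name in candidates if name}


# Hypothetical peer URI and certificate names, for illustration only.
peer_uri = "https://cluster-b.example.com:7722"

print(hostname_matches(peer_uri, "cluster-b.example.com"))
print(hostname_matches(peer_uri, "cluster-x.example.com",
                       ["Cluster-B.example.com"]))
print(hostname_matches(peer_uri, "cluster-x.example.com"))
```

With the check enabled, a peer reached by an alias or IP address that does not appear in its certificate would be rejected, so account URIs and certificate names need to be kept consistent.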

For object storage connections, SmartSync uses ‘isi_cloud_api’, just as CloudPools does. As such, all considerations that apply to CloudPools also apply to SmartSync.

In the next article in this series, we’ll turn our attention to the core architecture and configuration of SmartSync backup-to-object.