In this next article in the OneFS software journal mirroring series, we will dig into SJM’s underpinnings and operation in a bit more depth.

With its debut in OneFS 9.11, the current focus of SJM is the all-flash F-series nodes containing either 61TB or 122TB QLC SSDs. In these cases, SJM dramatically improves the reliability of these dense drive platforms with journal fault tolerance. Specifically, it maintains a consistent copy of the primary node’s journal on a separate node. By automatically recovering the journal from this mirror, SJM is able to substantially reduce the node failure rate without the need for increased FEC protection overhead.

SJM is enabled by default for the applicable platforms on new clusters. So for clusters including F710 or F910 nodes with large QLC drives that ship with 9.11 installed, SJM will be automatically activated.

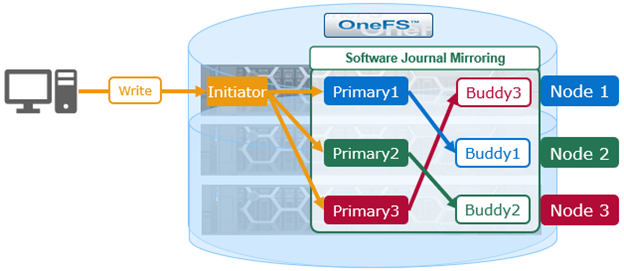

SJM adds a mirroring scheme that provides redundancy for the journal’s contents. Updates to /ifs are sent to a node’s local, or primary, journal as usual, but they are also synchronously replicated, or mirrored, to another node’s journal, referred to as the ‘buddy’.

Architecturally, SJM’s main components and associated lexicon are as follows:

| Item | Description |
|---|---|
| Primary | Node with a journal that is co-located with the data drives that the journal will flush to. |
| Buddy | Node with a journal that stores sufficient information about transactions on the primary to restore the contents of a primary node’s journal in the event of its failure. |
| Caller | Calling function that executes a transaction. Analogous to the initiator in the 2PC protocol. |
| Userspace journal library | Saves the backup, restores the backup, and dumps journal (primary and buddy). |
| Buddy reconfiguration system | Enables buddy reconfiguration and stores the mapping in buddy map via buddy updater. |
| Buddy mapping updater | Provides interfaces and protocol for updating buddy map. |
| Buddy map | Stores buddy map (primary <-> buddy). |
| Journal recovery subsystem | Facilitates journal recovery from buddy on primary journal loss. |
| Buddy map interface | Kernel interface for buddy map. |
| Mirroring subsystem | Mirrors global and local transactions. |
| JGN | Journal Generation Number, to identify versions and verify if two copies of a primary journal are consistent. |
| JGN interface | Journal Generation Number interface to update/read JGN. |
| NSB | Node state block, which stores JGN. |
| SB | Journal Superblock. |
| SyncForward | Mechanism to sync an out-of-date buddy journal with missed primary journal content additions & deletions. |
| SyncBack | Mechanism to reconstitute a blown primary journal from the mirrored information stored in the buddy journal. |

These components are organized into the following hierarchy and flow, split across kernel and user space:

A node’s primary journal is co-located with the data drives that it will flush to. In contrast, the buddy journal lives on a remote node and stores sufficient information about transactions on the primary, to allow it to restore the contents of a primary node’s journal in the event of its failure.

SyncForward is the mechanism by which an out-of-date Buddy journal is caught up with any Primary journal transactions that it might have missed. SyncBack, or restore, on the other hand, allows a blown Primary journal to be reconstituted from the mirroring information stored in its Buddy journal.

SJM needs to be able to rapidly detect a number of failure scenarios and decide which recovery workflow to initiate. For example, with a blown primary journal, SJM must quickly determine whether the Buddy’s contents are complete enough for a SyncBack to fully reconstruct a valid Primary journal, or whether to resort to a more costly node rebuild instead. Or, if the Buddy node disconnects briefly, SJM must determine which of the Primary journal’s changes should be replicated during a SyncForward in order to bring the Buddy efficiently back into alignment.

SJM tags the transactions logged into the Primary journal, and their corresponding mirrors in the Buddy, with a monotonically increasing Journal Generation Number, or JGN.

The JGN represents the most recent & consistent copy of a primary node’s journal, and it’s incremented whenever the write status of the Buddy journal changes, which is tracked by the Primary via OneFS GMP group change updates.
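As a rough illustration of the rule above, the following Python sketch bumps a generation counter only when the Buddy's write status actually flips. The class and method names (`PrimaryJournalState`, `on_group_change`) are hypothetical and are not OneFS interfaces; the group change notification is reduced to a single boolean for simplicity.

```python
from dataclasses import dataclass


@dataclass
class PrimaryJournalState:
    """Hypothetical model of a primary node's view of its JGN."""

    jgn: int = 0                 # monotonically increasing generation number
    buddy_writable: bool = True  # last known write status of the buddy journal

    def on_group_change(self, buddy_writable: bool) -> int:
        """Handle a (simplified) group change update.

        The JGN is incremented only when the buddy's write status
        differs from the last known status, so it never decreases.
        """
        if buddy_writable != self.buddy_writable:
            self.buddy_writable = buddy_writable
            self.jgn += 1
        return self.jgn


state = PrimaryJournalState()
state.on_group_change(False)  # buddy became unwritable: JGN moves to 1
state.on_group_change(False)  # no status change: JGN stays at 1
state.on_group_change(True)   # buddy writable again: JGN moves to 2
```

Because every status flip produces a new generation, a Buddy copy that missed updates while it was unwritable is left carrying an older JGN than the Primary.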

In order to determine whether the Buddy journal’s contents are complete, the JGN needs to be available to the primary node when its primary journal is blown. So the JGN is stored in a Node State Block, or NSB, and saved on a quorum of the node’s data-drives. Therefore, upon loss of a Primary journal, the JGN in the node state block can be compared against the JGN in the Buddy to confirm its transaction mirroring is complete, before the SyncBack workflow is initiated.
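The comparison described above can be sketched in Python as follows. This is a simplified model under stated assumptions, not the OneFS implementation: the function names are hypothetical, and "quorum" is assumed here to mean a strict majority of the node's data drives agreeing on the same JGN value.

```python
from collections import Counter
from enum import Enum
from typing import Optional, Sequence


class Recovery(Enum):
    SYNC_BACK = "sync_back"        # rebuild primary journal from the buddy
    NODE_REBUILD = "node_rebuild"  # costlier fallback


def read_quorum_jgn(drive_jgns: Sequence[Optional[int]]) -> Optional[int]:
    """Return the JGN held by a strict majority of drives, else None.

    Each entry is the JGN read from one drive's node state block,
    or None if that drive's copy could not be read (assumed model).
    """
    readable = [j for j in drive_jgns if j is not None]
    if not readable:
        return None
    jgn, votes = Counter(readable).most_common(1)[0]
    return jgn if votes > len(drive_jgns) // 2 else None


def choose_recovery(drive_jgns: Sequence[Optional[int]],
                    buddy_jgn: Optional[int]) -> Recovery:
    """Pick a recovery workflow after the primary journal is lost."""
    nsb_jgn = read_quorum_jgn(drive_jgns)
    # SyncBack is only safe if the buddy's generation matches the NSB's.
    if nsb_jgn is not None and buddy_jgn == nsb_jgn:
        return Recovery.SYNC_BACK
    return Recovery.NODE_REBUILD
```

For example, `choose_recovery([7, 7, 7, None], 7)` selects SyncBack, whereas a Buddy reporting JGN 6 against an NSB quorum of 7 falls through to a node rebuild.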

A primary transaction exists on the node where data storage is being modified, and the corresponding buddy transaction is a hot, redundant duplicate of the primary information on a separate node. The SDPM journal storage on the F-series platforms is fast, and the pipe between nodes across the backend network is optimized for low-latency bulk data flow. This allows the standard POSIX file model to transparently operate on the front-end protocols, which are blissfully unaware of any journal jockeying that’s occurring behind the scenes.

The journal mirroring activity is continuous, and if the Primary loses contact with its Buddy, it will urgently seek out another Buddy and repeat the mirroring for each active transaction, to regain a fully mirrored journal config. If the reverse happens, and the Primary vanishes due to an adverse event like a local power loss or an unexpected reboot, the primary can reattach to its designated buddy and ensure that its own journal is consistent with the transactions that the Buddy has kept safely mirrored. This means that the buddy must reside on a different node than the primary. As such, it’s normal and expected for each primary node to also be operating as the buddy for a different node.
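To make the primary/buddy relationship concrete, here is a small Python sketch of one possible assignment policy: each primary takes the next available node in a ring as its buddy. The ring policy and the function names are purely illustrative assumptions; the article does not describe how OneFS actually selects a buddy. The sketch only demonstrates the two properties stated above, namely that a buddy is never the primary itself and that each node can be both a primary and someone else's buddy.

```python
from typing import Dict, Iterable, Optional


def pick_buddy(primary: int, nodes_up: Iterable[int]) -> Optional[int]:
    """Pick the next higher node ID that is up, wrapping around the ring.

    Returns None when no other node is available to act as buddy.
    """
    candidates = sorted(n for n in nodes_up if n != primary)
    if not candidates:
        return None
    for node in candidates:
        if node > primary:
            return node
    return candidates[0]  # wrap around to the lowest node ID


def build_buddy_map(nodes_up: Iterable[int]) -> Dict[int, Optional[int]]:
    """Recompute the primary -> buddy mapping for the current group."""
    nodes = set(nodes_up)
    return {primary: pick_buddy(primary, nodes) for primary in sorted(nodes)}


full_map = build_buddy_map({1, 2, 3})   # {1: 2, 2: 3, 3: 1}
after_loss = build_buddy_map({1, 3})    # node 2 gone: {1: 3, 3: 1}
```

In this toy model, when node 2 drops out, node 1 loses its buddy and is remapped to node 3, which corresponds to the point at which the Primary would repeat the mirroring of its active transactions to the new Buddy.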

The prerequisite platform requirements for SJM support in 9.11, which are referred to as ‘SJM-capable’ nodes, are as follows:

Essentially, any F710 or F910 node with 61TB or 122TB SSDs that shipped with OneFS 9.10 or later is considered SJM-capable.

Note that there are a small number of F710 and F910s with 61TB drives out there which were shipped with OneFS 9.9 or earlier installed. These nodes must be re-imaged before they can use SJM. So they first need to be SmartFailed out, then USB reimaged to OneFS 9.10 or later. This is to allow the node’s SDPM journal device to be reformatted to include a second partition for the 16 GiB buddy journal allocation. However, this 16 GiB of space reserved for the buddy journal will not be used when SJM is disabled. The following table shows the maximum SDPM usage per journal type based on SJM enablement:

| Journal State | Primary journal | Buddy journal |
|---|---|---|
| SJM enabled | 16 GiB | 16 GiB |
| SJM disabled | 16 GiB | 0 GiB |

But to reiterate, the SJM-capable platforms which will ship with OneFS 9.11 installed, or those that shipped with OneFS 9.10, are ready to run SJM, and will form node pools of equivalent type.

While SJM is available upon upgrade commit to OneFS 9.11, it is not automatically activated. So for any F710 or F910 nodes with large QLC drives that were originally shipped with OneFS 9.10 installed, the cluster admin will need to manually enable SJM on any capable pools after their upgrade to 9.11.

Plus, if SJM is not activated, a CELOG alert will be raised, encouraging the customer to enable it, in order for the cluster to meet the reliability requirements. This CELOG alert will contain information about the administrative actions required to enable SJM.

Additionally, OneFS 9.11 includes a pre-upgrade check that prevents any existing cluster with nodes containing 61TB drives that were shipped with OneFS 9.9 or older from upgrading directly to 9.11. The upgrade can proceed only once these nodes have been USB-reimaged and their journals reformatted.
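The logic of such a check can be sketched in Python as below. The `Node` fields, the function names, and the idea of tracking the release under which the journal was last formatted are all assumptions made for illustration; they do not reflect the real OneFS upgrade framework. Note that release strings are compared as integer tuples, since a naive numeric comparison would wrongly rank 9.10 below 9.9.

```python
from dataclasses import dataclass

# Assumed threshold: journals formatted under 9.10 or later have the
# second SDPM partition for the buddy journal.
MIN_JOURNAL_FORMAT = (9, 10)
DENSE_MODELS = {"F710", "F910"}


def parse_release(release: str) -> tuple[int, ...]:
    """Turn '9.10' into (9, 10) so releases compare correctly."""
    return tuple(int(part) for part in release.split("."))


@dataclass
class Node:
    name: str
    model: str
    drive_tb: int
    # Hypothetical field: release that last formatted the SDPM journal,
    # either at shipment or via a later USB re-image.
    journal_formatted_under: str


def blocks_upgrade(node: Node) -> bool:
    """True if this node must be re-imaged before upgrading to 9.11."""
    return (
        node.model in DENSE_MODELS
        and node.drive_tb == 61
        and parse_release(node.journal_formatted_under) < MIN_JOURNAL_FORMAT
    )


def pre_upgrade_check(nodes: list[Node]) -> list[str]:
    """Return the names of nodes that block the upgrade (empty = pass)."""
    return [node.name for node in nodes if blocks_upgrade(node)]


cluster = [
    Node("n1", "F710", 61, "9.10"),
    Node("n2", "F910", 61, "9.9"),   # shipped on 9.9, never re-imaged
    Node("n3", "F710", 122, "9.10"),
]
blocking = pre_upgrade_check(cluster)  # ["n2"]
```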