WebHDFS

Hortonworks developed an API called WebHDFS, based on standard REST functionality, to support operations such as creating, renaming, or deleting files and directories; opening, reading, or writing files; setting permissions; and so on. This works well for applications running within the Hadoop cluster, and it also covers use cases where an external application needs to manipulate HDFS, for example to create directories and write files into them, or to read the content of a file stored on HDFS. WebHDFS is based on the HTTP operations GET, PUT, POST, and DELETE. Authentication can use the user.name query parameter (part of the HTTP query string) or, if security is turned on, Kerberos (SPNEGO).

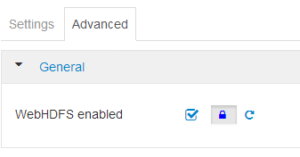
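Every example later in this post follows the same URL pattern, and each operation maps to one of the four HTTP methods. As a minimal sketch, assuming a plain HTTP endpoint like the one used in the examples, the hypothetical shell functions webhdfs_url and webhdfs_method compose a request URL and look up the method for an operation. The method grouping follows the Apache WebHDFS REST API documentation; whether OneFS implements every listed operation is not verified here.

```shell
# Hypothetical helper: build a WebHDFS REST URL of the form
#   http://<host:port>/webhdfs/v1<path>?op=<OP>[&key=value...]
webhdfs_url() {
  local host_port=$1   # e.g. isilon40g.kanagawa.demo:8082
  local hdfs_path=$2   # absolute HDFS path, e.g. /tmp (may be empty)
  local op=$3          # WebHDFS operation, e.g. LISTSTATUS
  shift 3
  local url="http://${host_port}/webhdfs/v1${hdfs_path}?op=${op}"
  local param
  # Append any extra query parameters, e.g. user.name=hdfs-hdp265
  for param in "$@"; do
    url="${url}&${param}"
  done
  printf '%s\n' "$url"
}

# Hypothetical helper: print the HTTP method an operation uses, following
# the grouping in the Apache WebHDFS REST API documentation.
webhdfs_method() {
  case $1 in
    OPEN|GETFILESTATUS|LISTSTATUS|GETCONTENTSUMMARY|GETFILECHECKSUM|GETHOMEDIRECTORY)
      echo GET ;;
    CREATE|MKDIRS|RENAME|SETREPLICATION|SETOWNER|SETPERMISSION|SETTIMES)
      echo PUT ;;
    APPEND|CONCAT|TRUNCATE)
      echo POST ;;
    DELETE)
      echo DELETE ;;
    *)
      return 1 ;;   # unknown operation: print nothing, fail
  esac
}
```

For example, curl --negotiate -u : -X "$(webhdfs_method LISTSTATUS)" "$(webhdfs_url isilon40g.kanagawa.demo:8082 /hdp LISTSTATUS)" issues the same request as the directory-status example; passing an empty path ("") reproduces the GETHOMEDIRECTORY URL used in the first example.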

WebHDFS is enabled in a Hadoop cluster by defining the following property in hdfs-site.xml (it can also be checked in the Ambari UI under HDFS service –> Config):

<property>
  <name>dfs.webhdfs.enabled</name>
  <value>true</value>
  <final>true</final>
</property>

Ambari UI –> HDFS Service –> Config –> General
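To check the property from a client shell rather than in Ambari, one rough approach is to read the value straight out of hdfs-site.xml. The function name site_xml_value is hypothetical, and it matches text rather than parsing XML, so it assumes the <value> element directly follows the <name> element, as in the snippet above.

```shell
# Hypothetical helper: print the <value> of a named property from a Hadoop
# *-site.xml file. Text matching only (not a real XML parser): it assumes the
# <value> element follows the matching <name> element, as in the snippet above.
site_xml_value() {
  local file=$1 key=$2
  # Join all lines so a <name>/<value> pair split across lines still matches
  tr '\n' ' ' < "$file" |
    sed -n "s:.*<name>${key}</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p"
}
```

On an HDP client the file is typically /etc/hadoop/conf/hdfs-site.xml, so site_xml_value /etc/hadoop/conf/hdfs-site.xml dfs.webhdfs.enabled should print true. hdfs getconf -confKey dfs.webhdfs.enabled is an alternative that asks the Hadoop client configuration directly instead of matching text.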

The user hdfs-hdp265 is used for the rest of this testing, so first obtain a Kerberos ticket for hdfs-hdp265:

[root@hawkeye03 ~]# kinit -kt /etc/security/keytabs/hdfs.headless.keytab hdfs-hdp265
[root@hawkeye03 ~]# klist
Ticket cache: FILE:/tmp/krb5cc_0
Default principal: hdfs-hdp265@KANAGAWA.DEMO

Valid starting       Expires              Service principal
09/16/2018 19:01:36  09/17/2018 05:01:36  krbtgt/KANAGAWA.DEMO@KANAGAWA.DEMO
        renew until 09/23/2018 19:01:

CURL

curl(1) itself knows nothing about Kerberos and does not interact with either your credential cache or your keytab file. It delegates all calls to a GSS-API implementation, which does the actual Kerberos work. What that work involves depends on the library, Heimdal or MIT Kerberos.

Verify this with curl --version, which should list GSS-API and SPNEGO among its features, and with ldd, which should show curl linked against your MIT Kerberos libraries.
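The curl --version check can be scripted. This is a sketch with a hypothetical function name, curl_has_spnego: it reads curl --version output on stdin and accepts either the GSS-API plus SPNEGO feature pair reported by newer curl releases or the single GSS-Negotiate feature that older releases print instead.

```shell
# Hypothetical helper: read `curl --version` output on stdin and succeed only if
# the Features line advertises SPNEGO through GSS-API.
# Newer curl releases list GSS-API and SPNEGO as separate features;
# older releases report the same capability as GSS-Negotiate.
curl_has_spnego() {
  local features
  features=$(grep '^Features:' || true)
  case $features in
    *GSS-API*SPNEGO*|*SPNEGO*GSS-API*) return 0 ;;
    *GSS-Negotiate*) return 0 ;;
  esac
  return 1
}
```

Typical use is curl --version | curl_has_spnego && echo "curl can do SPNEGO". For the library side, ldd "$(command -v curl)" | grep -i gssapi should list libgssapi_krb5 when curl is built against MIT Kerberos.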

- Create a client keytab for the service principal with ktutil or msktutil

- Obtain a TGT with that client keytab by running kinit -k -t <path-to-keytab> <principal-from-keytab>

- Verify with klist that you have a ticket cache

The environment is now ready to go:

- Export KRB5CCNAME=<some-non-default-path>

- Export KRB5_CLIENT_KTNAME=<path-to-keytab>

- Invoke curl --negotiate -u : <URL>

MIT Kerberos detects that both environment variables are set, inspects them, automatically obtains a TGT with your keytab, requests a service ticket, and passes it to curl. You are done.
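The environment-variable steps above can be wrapped in a small shell function. This is a minimal sketch: webhdfs_krb_env is a hypothetical name, and the default cache path it picks is an arbitrary non-default location. It only exports the two variables; MIT Kerberos does the rest when curl --negotiate runs.

```shell
# Hypothetical helper: point MIT Kerberos at a client keytab and a private
# (non-default) credential cache for the current shell.
#   $1 = path to the client keytab
#   $2 = optional cache file path (defaults to a per-shell file under /tmp)
webhdfs_krb_env() {
  local keytab=$1
  local cache=${2:-/tmp/krb5cc_webhdfs_$$}
  # A separate cache keeps these tickets apart from the default cache (e.g. /tmp/krb5cc_0)
  export KRB5CCNAME="FILE:${cache}"
  # With this set, MIT Kerberos can obtain a TGT from the keytab on demand
  export KRB5_CLIENT_KTNAME="$keytab"
}
```

Calling webhdfs_krb_env /etc/security/keytabs/hdfs.headless.keytab and then curl --negotiate -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1?op=GETHOMEDIRECTORY" should authenticate without a prior kinit. Afterwards, klist -s exits with status 0 if the cache named by KRB5CCNAME holds valid tickets.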

WebHDFS Examples

1. Check home directory

[root@hawkeye03 ~]# curl --negotiate -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1?op=GETHOMEDIRECTORY"
{ "Path" : "/user/hdfs-hdp265" }
[root@hawkeye03 ~]#
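To use the home directory in a script, the path can be pulled out of the JSON response. This sketch assumes jq is not installed and uses sed instead; the function name webhdfs_home_path is hypothetical.

```shell
# Hypothetical helper: read a GETHOMEDIRECTORY response on stdin, e.g.
#   { "Path" : "/user/hdfs-hdp265" }
# and print just the path. Uses sed so that jq is not required.
webhdfs_home_path() {
  sed -n 's/.*"Path"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For example, home=$(curl -s --negotiate -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1?op=GETHOMEDIRECTORY" | webhdfs_home_path) would leave /user/hdfs-hdp265 in $home for the session above.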

2. Check directory status

[root@hawkeye03 ~]# curl --negotiate -u : -X GET "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/hdp?op=LISTSTATUS"
{
  "FileStatuses" : {
    "FileStatus" : [ {
      "accessTime" : 1536824856850,
      "blockSize" : 0,
      "childrenNum" : -1,
      "fileId" : 4443865584,
      "group" : "hadoop",
      "length" : 0,
      "modificationTime" : 1536824856850,
      "owner" : "root",
      "pathSuffix" : "apps",
      "permission" : "755",
      "replication" : 0,
      "type" : "DIRECTORY"
    } ]
  }
}
[root@hawkeye03 ~]#

3. Create a directory

[root@hawkeye03 ~]# curl --negotiate -u : -X PUT "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir?op=MKDIRS"
{ "boolean" : true }
[root@hawkeye03 ~]# hadoop fs -ls /tmp | grep webhdfs
drwxr-xr-x - root hdfs 0 2018-09-16 19:09 /tmp/webhdfs_test_dir
[root@hawkeye03 ~]#
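The LISTSTATUS and MKDIRS responses above are plain JSON. For scripting without jq, here is a rough sketch with two hypothetical helpers: webhdfs_list_names prints the pathSuffix of each LISTSTATUS entry, and webhdfs_bool_ok succeeds when a response such as MKDIRS reports true. Both match text, so they assume the field layout shown in this post.

```shell
# Hypothetical helpers for the JSON shown above, using grep/sed only (jq is not assumed).

# Print each "pathSuffix" from a LISTSTATUS response on stdin, one per line.
webhdfs_list_names() {
  grep -o '"pathSuffix"[[:space:]]*:[[:space:]]*"[^"]*"' |
    sed 's/.*"\([^"]*\)"$/\1/'
}

# Succeed only if a MKDIRS/RENAME/DELETE response on stdin reports { "boolean" : true }.
webhdfs_bool_ok() {
  grep -q '"boolean"[[:space:]]*:[[:space:]]*true'
}
```

For the listing above, curl -s --negotiate -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/hdp?op=LISTSTATUS" | webhdfs_list_names should print apps, and piping the MKDIRS response into webhdfs_bool_ok lets a script stop early if directory creation fails.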

4. Create a file :: With OneFS 8.1.2, file operations can be performed with a single REST API call.

[root@hawkeye03 ~]# hadoop fs -ls -R /tmp/webhdfs_test_dir/
[root@hawkeye03 ~]#
[root@hawkeye03 ~]# curl --negotiate -u : -X PUT "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/webhdfs-test_file?op=CREATE"
[root@hawkeye03 ~]# curl --negotiate -u : -X PUT "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/webhdfs-test_file_2?op=CREATE"
[root@hawkeye03 ~]#
[root@hawkeye03 ~]# hadoop fs -ls -R /tmp/webhdfs_test_dir/
-rwxr-xr-x 3 root hdfs 0 2018-09-16 19:15 /tmp/webhdfs_test_dir/webhdfs-test_file
-rwxr-xr-x 3 root hdfs 0 2018-09-16 19:15 /tmp/webhdfs_test_dir/webhdfs-test_file_2
[root@hawkeye03 ~]#
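The single-call CREATE above is what this post describes for OneFS 8.1.2. Against a stock Apache Hadoop NameNode, the WebHDFS documentation describes CREATE as two steps: a first PUT, sent without file data and without following redirects, returns a 307 redirect whose Location header names a DataNode, and the data is then PUT to that Location. Below is a hedged sketch of the header-parsing part, using the hypothetical function http_location.

```shell
# Hypothetical helper: read a raw HTTP response (headers included, as printed
# by `curl -i`) on stdin and print the first Location header value.
# Header names are case-insensitive, and HTTP lines end in CR LF, so the
# trailing carriage return is stripped before printing.
http_location() {
  awk 'tolower($1) == "location:" { sub(/\r$/, "", $2); print $2; exit }'
}
```

A two-step upload could then look like loc=$(curl -s -i --negotiate -u : -X PUT "http://<namenode>:<port>/webhdfs/v1/<path>?op=CREATE" | http_location) followed by curl --negotiate -u : -X PUT -T <local-file> "$loc", where the angle-bracket parts are placeholders.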

5. Upload sample file

[root@hawkeye03 ~]# echo "WebHDFS Sample Test File" > WebHDFS.txt
[root@hawkeye03 ~]# curl --negotiate -T WebHDFS.txt -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/WebHDFS.txt?op=CREATE&overwrite=false"
[root@hawkeye03 ~]# hadoop fs -ls -R /tmp/webhdfs_test_dir/
-rwxr-xr-x 3 root hdfs 0 2018-09-16 19:41 /tmp/webhdfs_test_dir/WebHDFS.txt
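The listing above reports a length of 0 for WebHDFS.txt, although the local file holds 25 bytes (the 24-character string plus the newline that echo adds). One way to check what actually arrived is the GETFILESTATUS operation, whose JSON response includes a length field. The sketch below extracts it with the hypothetical helper webhdfs_file_length.

```shell
# Hypothetical helper: read a GETFILESTATUS response on stdin, e.g.
#   { "FileStatus" : { ..., "length" : 25, ... } }
# and print the file length in bytes.
webhdfs_file_length() {
  sed -n 's/.*"length"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Compare curl -s --negotiate -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/WebHDFS.txt?op=GETFILESTATUS" | webhdfs_file_length with wc -c < WebHDFS.txt. If the server answers the CREATE with a 307 redirect (as the OPEN example below shows for reads), adding -L should make curl repeat the PUT, with the file body, against the redirect target.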

6. Open and read a file :: With OneFS 8.1.2, file operations can be performed with a single REST API call.

[root@hawkeye03 ~]# curl --negotiate -i -L -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/WebHDFS_read.txt?user.name=hdfs-hdp265&op=OPEN"
HTTP/1.1 307 Temporary Redirect
Date: Mon, 17 Sep 2018 08:18:45 GMT
Server: Apache/2.4.29 (FreeBSD) OpenSSL/1.0.2o-fips mod_fastcgi/mod_fastcgi-SNAP-0910052141
Location: http://172.16.59.102:8082/webhdfs/v1/tmp/webhdfs_test_dir/WebHDFS_read.txt?user.name=hdfs-hdp265&op=OPEN&datanode=true
Content-Length: 0
Content-Type: application/octet-stream

HTTP/1.1 200 OK
Date: Mon, 17 Sep 2018 08:18:45 GMT
Server: Apache/2.4.29 (FreeBSD) OpenSSL/1.0.2o-fips mod_fastcgi/mod_fastcgi-SNAP-0910052141
Content-Length: 30
Content-Type: application/octet-stream

Sample WebHDFS read test file

or

[root@hawkeye03 ~]# curl --negotiate -L -u : "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir/WebHDFS_read.txt?op=OPEN&datanode=true"
Sample WebHDFS read test file
[root@hawkeye03 ~]#
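The Apache WebHDFS REST API also gives OPEN optional offset and length query parameters, which are handy for reading part of a large file. Here is a sketch with the hypothetical helper webhdfs_open_range_url; whether OneFS honors these parameters is an assumption not tested in this post.

```shell
# Hypothetical helper: build an OPEN URL that reads <length> bytes starting at
# byte <offset>, using the optional offset/length parameters of WebHDFS OPEN.
webhdfs_open_range_url() {
  local host_port=$1 path=$2 offset=$3 length=$4 n
  # Both values must be non-negative integers
  for n in "$offset" "$length"; do
    case $n in
      ''|*[!0-9]*) echo "offset and length must be non-negative integers" >&2; return 1 ;;
    esac
  done
  printf 'http://%s/webhdfs/v1%s?op=OPEN&offset=%s&length=%s\n' \
    "$host_port" "$path" "$offset" "$length"
}
```

For example, curl --negotiate -L -u : "$(webhdfs_open_range_url isilon40g.kanagawa.demo:8082 /tmp/webhdfs_test_dir/WebHDFS_read.txt 0 6)" requests the first six bytes; -L is still needed to follow the 307 redirect shown above.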

7. Rename a directory

[root@hawkeye03 ~]# curl --negotiate -u : -X PUT "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir?op=RENAME&destination=/tmp/webhdfs_test_dir_renamed"
{ "boolean" : true }
[root@hawkeye03 ~]# hadoop fs -ls /tmp/ | grep webhdfs
drwxr-xr-x - root hdfs 0 2018-09-16 19:48 /tmp/webhdfs_test_dir_renamed
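The Apache WebHDFS documentation describes the RENAME destination parameter as an absolute path without scheme or authority, as in the request above. The sketch below checks for that before building the URL; webhdfs_rename_url is a hypothetical name.

```shell
# Hypothetical helper: build a RENAME URL. Relative destinations are rejected
# here, before any request is sent, because WebHDFS expects an absolute path.
webhdfs_rename_url() {
  local host_port=$1 src=$2 dst=$3
  case $dst in
    /*) ;;
    *) echo "destination must be an absolute path: $dst" >&2; return 1 ;;
  esac
  printf 'http://%s/webhdfs/v1%s?op=RENAME&destination=%s\n' "$host_port" "$src" "$dst"
}
```

webhdfs_rename_url isilon40g.kanagawa.demo:8082 /tmp/webhdfs_test_dir /tmp/webhdfs_test_dir_renamed reproduces the URL used in this step.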

8. Delete a directory :: The directory must be empty before it can be deleted

[root@hawkeye03 ~]# curl --negotiate -u : -X DELETE "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir_renamed?op=DELETE"
{
  "RemoteException" : {
    "exception" : "PathIsNotEmptyDirectoryException",
    "javaClassName" : "org.apache.hadoop.fs.PathIsNotEmptyDirectoryException",
    "message" : "Directory is not empty."
  }
}
[root@hawkeye03 ~]#
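Errors come back as a RemoteException JSON object, so a script can branch on the exception name rather than on the message text. The Apache WebHDFS REST API also defines an optional recursive parameter for DELETE (default false) that removes a non-empty directory in one call; this post does not test it against OneFS, so treat that as an assumption. Here is a sketch with two hypothetical helpers.

```shell
# Hypothetical helper: print the exception name from a WebHDFS RemoteException
# response read on stdin (works for single-line or pretty-printed JSON).
webhdfs_exception() {
  sed -n 's/.*"exception"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Hypothetical helper: build a DELETE URL with an explicit recursive flag.
# The Apache WebHDFS API defaults recursive to false; support on OneFS is assumed.
webhdfs_delete_url() {
  local host_port=$1 path=$2 recursive=${3:-false}
  case $recursive in
    true|false) ;;
    *) echo "recursive must be true or false" >&2; return 1 ;;
  esac
  printf 'http://%s/webhdfs/v1%s?op=DELETE&recursive=%s\n' "$host_port" "$path" "$recursive"
}
```

If webhdfs_exception prints PathIsNotEmptyDirectoryException for a DELETE response, a script could either remove the contents first, as the next step does, or retry with the URL from webhdfs_delete_url isilon40g.kanagawa.demo:8082 /tmp/webhdfs_test_dir_renamed true.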

Once the directory contents are removed, the directory can be deleted:

[root@hawkeye03 ~]# curl --negotiate -u : -X DELETE "http://isilon40g.kanagawa.demo:8082/webhdfs/v1/tmp/webhdfs_test_dir_renamed?op=DELETE"
{ "boolean" : true }
[root@hawkeye03 ~]# hadoop fs -ls /tmp | grep webhdfs
[root@hawkeye03 ~]#

Summary

WebHDFS provides a simple, standard way for an external client, one that does not necessarily run on the Hadoop cluster itself, to execute Hadoop filesystem operations. WebHDFS does require the client to have a direct connection to the NameNode and DataNodes on their predefined ports. Hadoop HDFS over HTTP (HttpFS), which was inspired by HDFS Proxy, addresses this limitation by providing a proxy layer based on a preconfigured Tomcat bundle; it is interoperable with the WebHDFS API but does not require those firewall ports to be open to the client.