Another security enhancement that OneFS 9.5 brings to the table is the ability to configure 1GbE NIC ports dedicated to cluster management on the PowerScale F900, F600, and F200 all-flash storage nodes and the P100 and B100 accelerators. Since these platforms were released, customers have been requesting the ability to activate the 1GbE NIC ports so that node management activity and front-end protocol traffic could be separated on physically distinct interfaces.

For background, the F600 and F900 have shipped with a quad-port 1GbE rNDC (rack Converged Network Daughter Card) adapter since their introduction. However, these 1GbE ports were non-functional and unsupported in OneFS releases prior to 9.5. As such, node management and front-end traffic were commingled on the front-end interface.

In OneFS 9.5 and later, 1GbE network ports are supported on all of the PowerScale PowerEdge-based platforms for node management, physically separate from the other network interfaces. Specifically, this enhancement applies to the F900, F600, and F200 all-flash nodes, and the P100 and B100 accelerators.

Under the hood, OneFS has been updated to recognize the 1GbE rNDC NIC ports as usable for a management interface. Note that the focus of this enhancement is on factory enablement and support for existing F600 customers that have the unused 1GbE rNDC hardware. This functionality has also been back-ported to OneFS 9.4.0.3 and later RUPs. Since the introduction of this feature, several requests have been raised about field upgrades, but that use case is separate and will be addressed in a later release via scripts, updates of node receipts, procedures, etc.

Architecturally, barring some device driver and accounting work, no substantial changes were required to the underlying OneFS or platform architecture in order to implement this feature. This means that, in addition to activating the rNDC, OneFS now supports the relocated front-end NIC in PCI slots 2 or 3 for the F200, B100, and P100.

OneFS 9.5 and later recognizes the 1GbE rNDC as usable for the management interface in the OneFS Wizard, in the same way it always has for the H-series and A-series chassis-based nodes.

All four ports in the 1GbE NIC are active, and, for the Broadcom board, the interfaces are initialized and reported as bge0, bge1, bge2, and bge3.
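As a quick sanity check of the port naming described above, the following Python sketch reports which of the four expected `bge` interfaces are missing from a list of interface names. It assumes the input is a space-separated list of names, such as the one `ifconfig -l` prints on FreeBSD-based systems. The helper name `missing_rndc_ports` and the sample lists are hypothetical and only illustrate the idea.

```python
# Interface names reported for the Broadcom quad-port 1GbE rNDC, per the text above.
EXPECTED_PORTS = ("bge0", "bge1", "bge2", "bge3")


def missing_rndc_ports(interface_list):
    """Return the expected bge ports that are absent from a space-separated name list."""
    present = set(interface_list.split())
    return [port for port in EXPECTED_PORTS if port not in present]


if __name__ == "__main__":
    # Hypothetical interface list; on a real node this would come from the CLI.
    sample = "bge0 bge1 bge2 bge3 lo0"
    print(missing_rndc_ports(sample))
```

An empty result means all four ports were initialized. Any names returned are ports that the driver did not report.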

The ‘pciconf’ CLI utility can be used to determine whether the rNDC NIC is present in a node. If it is, a variety of identification and configuration details are displayed. For example, the following output is from a Broadcom rNDC NIC in an F200 node:

    # pciconf -lvV pci0:24:0:0
    bge2@pci0:24:0:0: class=0x020000 card=0x1f5b1028 chip=0x165f14e4 rev=0x00 hdr=0x00
        class      = network
        subclass   = ethernet
        VPD ident  = 'Broadcom NetXtreme Gigabit Ethernet'
        VPD ro PN  = 'BCM95720'
        VPD ro MN  = '1028'
        VPD ro V0  = 'FFV7.2.14'
        VPD ro V1  = 'DSV1028VPDR.VER1.0'
        VPD ro V2  = 'NPY2'
        VPD ro V3  = 'PMT1'
        VPD ro V4  = 'NMVBroadcom Corp'
        VPD ro V5  = 'DTINIC'
        VPD ro V6  = 'DCM1001008d452101000d45'
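For scripted inventory checks, the pciconf record above can be parsed into a dictionary. The sketch below is a minimal Python illustration that assumes the record layout matches the sample shown (one header line followed by `name = value` detail lines). The function names `parse_pciconf` and `is_gige_management_nic` are hypothetical, and the "bge driver plus ethernet subclass" test is an assumed heuristic rather than an official detection method.

```python
import re

# Abbreviated copy of the pciconf record shown above (F200, Broadcom rNDC).
SAMPLE = """\
bge2@pci0:24:0:0: class=0x020000 card=0x1f5b1028 chip=0x165f14e4 rev=0x00 hdr=0x00
    class      = network
    subclass   = ethernet
    VPD ident  = 'Broadcom NetXtreme Gigabit Ethernet'
    VPD ro PN  = 'BCM95720'
"""


def parse_pciconf(text):
    """Parse one pciconf device record into a dict."""
    lines = text.strip().splitlines()
    # Header line: <device>@<selector>: key=value key=value ...
    header = re.match(r"(\w+)@(\S+):\s+(.*)", lines[0])
    if not header:
        raise ValueError("not a pciconf device record")
    info = {
        "device": header.group(1),
        "selector": header.group(2),
        # Keep the hex IDs separate so "class=0x020000" does not clash
        # with the descriptive "class = network" detail line.
        "ids": dict(kv.split("=", 1) for kv in header.group(3).split()),
    }
    # Detail lines: "name = value", where the value may be single-quoted.
    for line in lines[1:]:
        name, sep, value = line.partition("=")
        if sep:
            info[name.strip()] = value.strip().strip("'")
    return info


def is_gige_management_nic(info):
    """Assumed heuristic: a bge (Broadcom) device with an ethernet subclass."""
    return info["device"].startswith("bge") and info.get("subclass") == "ethernet"


if __name__ == "__main__":
    record = parse_pciconf(SAMPLE)
    print(record["device"], record["VPD ident"], is_gige_management_nic(record))
```

Keeping the header IDs in a nested `ids` dictionary avoids overwriting the numeric PCI class with the descriptive `class` field.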

The ‘ifconfig’ CLI utility can be used to determine the specific IP/interface mapping on the Broadcom rNDC interface. For example:

    # ifconfig bge0
    TME-1: bge0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
    TME-1:         ether 00:60:16:9e:X:X
    TME-1:         inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 1
    TME-1:         inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 0
    TME-1:         media: Ethernet autoselect (1000baseT <full-duplex>)
    TME-1:         status: active

In the above output, the first IP address of the management interface’s pool is bound to ‘bge0’, the first port on the Broadcom rNDC NIC.
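The address binding described above can also be extracted programmatically. The following Python sketch pulls the `inet` entries and link status out of ifconfig text, assuming the hexadecimal netmask style shown in the sample. The helper names `inet_addresses` and `link_is_active` are hypothetical, and the sample string is copied from the output above.

```python
import ipaddress
import re

# Copy of the ifconfig output shown above, including the node-name prefix.
SAMPLE = """\
TME-1: bge0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1500
TME-1:         ether 00:60:16:9e:X:X
TME-1:         inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 1
TME-1:         inet 10.11.12.13 netmask 0xffffff00 broadcast 10.11.12.255 zone 0
TME-1:         media: Ethernet autoselect (1000baseT <full-duplex>)
TME-1:         status: active
"""

# Matches "inet <address> netmask 0x<8 hex digits>".
INET_RE = re.compile(r"inet (\S+) netmask (0x[0-9a-fA-F]{8})")


def inet_addresses(text):
    """Return each IPv4 address in the text as an IPv4Interface, in order."""
    found = []
    for addr, hexmask in INET_RE.findall(text):
        # Convert the hex netmask (e.g. 0xffffff00) to dotted-quad form.
        mask = ipaddress.IPv4Address(int(hexmask, 16))
        found.append(ipaddress.IPv4Interface(f"{addr}/{mask}"))
    return found


def link_is_active(text):
    """True if the output contains a 'status: active' line."""
    return re.search(r"status:\s*active\b", text) is not None


if __name__ == "__main__":
    for iface in inet_addresses(SAMPLE):
        print(iface, iface.network)
    print("link active:", link_is_active(SAMPLE))
```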

The ‘isi network pools’ CLI command can be used to determine the corresponding interface. Within the system zone, the management interface is allocated an address from the configured IP range within its associated interface pool. For example:

    # isi network pools list
    ID                 SC Zone               IP Ranges                Allocation Method
    ------------------------------------------------------------------------------------
    groupnet0.mgt.mgt  cluster_mgt_isln.com  10.11.12.13-10.11.12.20  static

    # isi network pools view groupnet0.mgt.mgt | grep -i ifaces
    Ifaces: 1:mgmt-1, 2:mgmt-1, 3:mgmt-1, 4:mgmt-1, 5:mgmt-1
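To tie the pool output back to the interface address, the sketch below checks whether an IP address falls inside a pool's `low-high` range and splits the `Ifaces` line into its members. It is a minimal Python illustration and assumes the number before each colon is the node's logical node number (LNN). The function names are hypothetical and are not part of any OneFS API.

```python
import ipaddress


def parse_ip_range(text):
    """Split a 'low-high' range string into two IPv4Address objects."""
    low, high = (ipaddress.IPv4Address(part.strip()) for part in text.split("-"))
    if low > high:
        raise ValueError(f"range is reversed: {text}")
    return low, high


def in_pool_range(address, range_text):
    """True if the address lies within the inclusive pool range."""
    low, high = parse_ip_range(range_text)
    return low <= ipaddress.IPv4Address(address) <= high


def parse_ifaces(line):
    """Turn 'Ifaces: 1:mgmt-1, 2:mgmt-1' into [(1, 'mgmt-1'), (2, 'mgmt-1')]."""
    _, _, members = line.partition("Ifaces:")
    pairs = []
    for member in members.split(","):
        member = member.strip()
        if not member:
            continue  # tolerate an empty list or a trailing comma
        # Assumption: the part before the colon is the node's LNN.
        lnn, _, name = member.partition(":")
        pairs.append((int(lnn), name))
    return pairs


if __name__ == "__main__":
    # Values taken from the pool listing shown above.
    print(in_pool_range("10.11.12.13", "10.11.12.13-10.11.12.20"))
    print(parse_ifaces("Ifaces: 1:mgmt-1, 2:mgmt-1, 3:mgmt-1, 4:mgmt-1, 5:mgmt-1"))
```

Comparing `IPv4Address` objects rather than strings keeps the range test numerically correct, for example when an octet grows from one digit to two.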

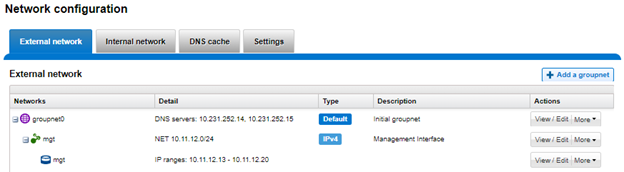

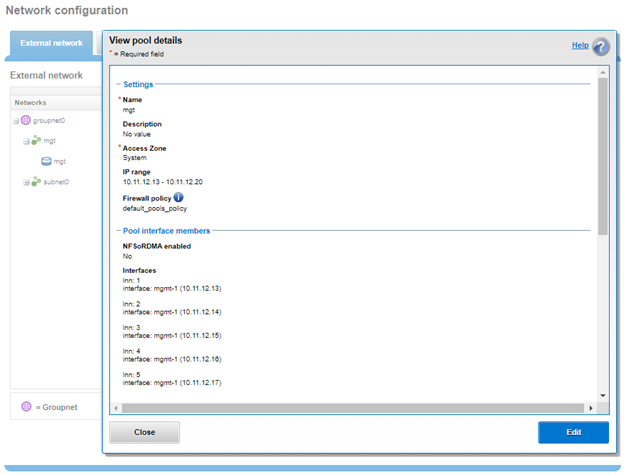

Or from the WebUI, under Cluster management >Network configuration > External network:

Drilling down into the ’mgt’ pool details show the 1GbE management interfaces as the pool interface members:

Note that the 1GbE rNDC network ports are solely intended as cluster management interfaces. As such, they are not supported for use with regular front-end data traffic.

The F900 and F600 nodes already ship with a four-port 1GbE rNDC NIC installed. The F200, B100, and P100 platform configurations have been updated to also include a quad-port 1GbE rNDC card, and these new configurations have been shipping by default since January 2023. This necessitated relocating the front-end network’s 25GbE NIC (Mellanox CX4) to PCI slot 2 in the motherboard. Additionally, the OneFS updates needed for this feature have also allowed the F200 platform to be offered with a 100GbE option. The 100GbE option uses a Mellanox CX6 NIC in place of the CX4 in slot 2.

With this 1GbE management interface enhancement, the same quad-port rNDC card (typically the Broadcom 5720) that has shipped in the F900 and F600 since their introduction is now included in the F200, B100, and P100 nodes as well. All four 1GbE rNDC ports are enabled and active under OneFS 9.5 and later.

Node port ordering continues to follow the standard, increasing numerically from left to right. However, be aware that the port labels are not visible externally since they are obscured by the enclosure’s sheet metal.

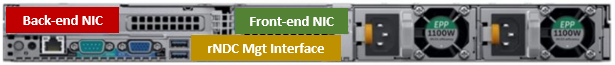

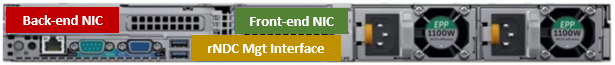

The following back-of-chassis hardware images show the new placements of the NICs in the various F-series and accelerator platforms:

PowerScale F600

PowerScale F900

For both the F600 and F900, the NIC placement remains unchanged, since these nodes have shipped with the quad-port 1GbE NIC in the rNDC slot since their launch.

PowerScale F200

The F200 sees its front-end NIC moved to slot 2, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

PowerScale B100

Since the B100 backup accelerator has a fibre-channel card in slot 2, it sees its front-end NIC moved to slot 3, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

PowerScale P100

Finally, the P100 accelerator sees its front-end NIC moved to slot 3, freeing up the rNDC slot for the quad-port 1GbE Broadcom 5720.

Note that, while there is currently no field hardware upgrade process for adding rNDC cards to legacy F200 nodes or B100 and P100 accelerators, this will likely be addressed in a future release.